The challenge: AI applications vs. data warehouse performance.

Your data science team builds a sophisticated recommendation engine on Snowflake.

The model is elegant and trained on years of customer behavior data, with impressive accuracy scores in offline testing. The Snowflake training pipeline runs smoothly, feature engineering is solid and model performance looks excellent.

Snowflake excels at what it was designed for: complex analytical queries, data warehousing and batch processing. It's the perfect foundation for model training and historical analysis. The plan seems logical: start with recommendations, expand to real-time personalization, add fraud detection, build a customer service chatbot. Snowflake is already your data foundation — why not make it your AI foundation too?

Then production users start hitting your AI applications.

Reality check: When performance hits production

Three weeks later, the metrics tell a different story:

Recommendation engines serve yesterday's trending products while users have moved to different categories. By the time batch jobs process new interactions and update the serving layer, user intent has shifted. Modern recommendation systems increasingly rely on vector embeddings for semantic understanding, requiring similarity searches that can't wait for batch processing windows.

Fraud detection systems take 3-8 seconds to score transactions, long enough for legitimate purchases to feel broken and for actual fraud to complete while the system is still scoring.

Customer service chatbots work with yesterday's data, missing recent orders, account changes and current session context that should inform every interaction.

AI-powered dashboards make business users wait for insights that should feel instant, leading to decisions based on stale information or intuition instead of real-time intelligence.
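To make the similarity searches mentioned for recommendation engines concrete, here is a minimal sketch of a top-k lookup by cosine similarity over embeddings. The function names, item IDs and two-dimensional vectors are illustrative assumptions, not part of any product API, and a production system would typically rely on a database's vector index rather than this brute-force scan.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(
    query: List[float], catalog: Dict[str, List[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Score every catalog item against the query and return the k best matches."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
catalog = {
    "running-shoes": [1.0, 0.0],
    "hiking-boots": [0.9, 0.1],
    "laptop": [0.0, 1.0],
}
recommendations = top_k_similar([1.0, 0.0], catalog, k=2)
```

The query vector would come from the user's most recent interactions, which is why the lookup has to run at request time instead of inside a batch window.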

The pattern is clear: sophisticated models trapped behind infrastructure that can't match the pace of business.

Why Snowflake struggles with real-time AI applications

The problem isn't model quality, data accuracy or engineering capability. It's architectural mismatch.

Snowflake excels at analytical workloads — complex queries across massive datasets, batch processing, historical analysis and model training. It's optimized for thoroughness and scale, not operational speed.

AI applications need different performance characteristics: sub-second responses, real-time data access, high concurrency and consistent low latency. The systems that serve them must be optimized for speed and user experience.
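"Consistent low latency" is easier to reason about as a tail percentile than as an average. The sketch below computes a nearest-rank percentile over recorded response times and checks it against a target. The 100 ms default mirrors the sub-100ms figure used in this article; the choice of p95 and the helper names are assumptions for illustration.

```python
import math
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_target(samples_ms: List[float], target_ms: float = 100.0, p: float = 95) -> bool:
    """True when the p-th percentile latency is under the target."""
    return percentile(samples_ms, p) < target_ms


# Hypothetical measurements: 95 fast responses and 5 slow outliers.
latencies_ms = [20.0] * 95 + [500.0] * 5
within_p95 = meets_latency_target(latencies_ms, p=95)
within_p99 = meets_latency_target(latencies_ms, p=99)
```

With this sample the p95 check passes while the p99 check fails, which shows how a small share of slow requests can hide behind a healthy-looking median.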

Different jobs require different tools.

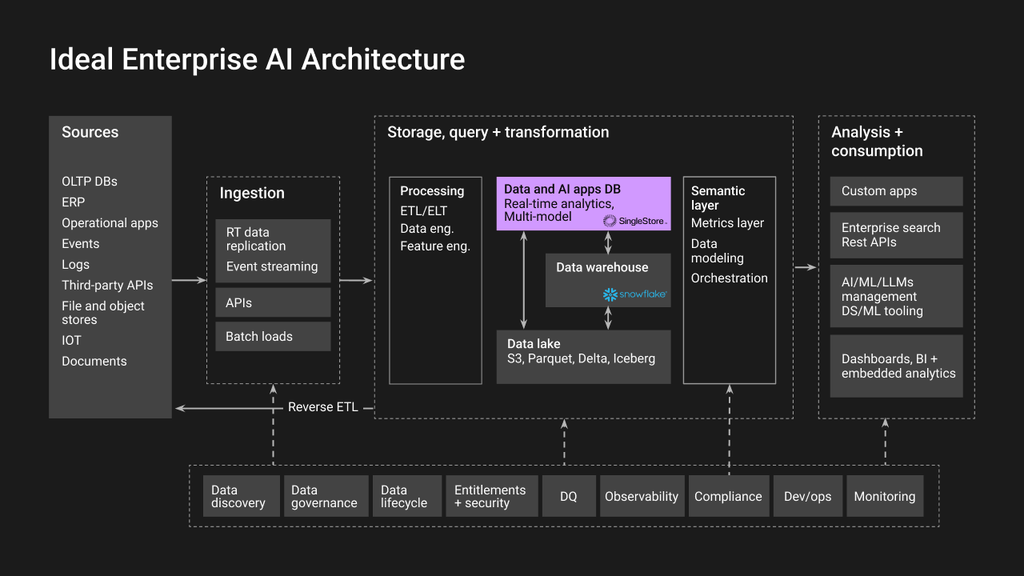

The modern AI architecture: Snowflake + SingleStore

Successful teams use a specialized architecture: Snowflake for what it does best, plus a real-time layer for AI application serving.

Snowflake: The AI training ground

- Model development and training

- Feature engineering and data preparation

- Historical analysis and batch scoring

- Data warehousing and analytics

SingleStore: The AI application engine

- Real-time recommendation serving (<100ms response times)

- Sub-second fraud detection with fresh signals

- Conversational AI with current customer context

- Interactive dashboards that respond instantly

This approach preserves your existing Snowflake investment while adding the performance layer your AI applications need. Rather than forcing a single platform to handle conflicting requirements, you optimize each component for its specific strength.
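As one illustration of what "fresh signals" can mean for the fraud-detection case listed above, the following sketch tracks transaction velocity per card over a sliding time window. The class name, the 60-second window and the integer timestamps are hypothetical; in practice a feature like this would be computed inside the serving database rather than in application memory.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityTracker:
    """Counts transactions per card within a sliding window of recent seconds."""

    def __init__(self, window_seconds: int = 60) -> None:
        self.window_seconds = window_seconds
        self._events: Dict[str, Deque[int]] = defaultdict(deque)

    def record(self, card_id: str, timestamp: int) -> int:
        """Record a transaction and return the count in the window, including this one.

        Assumes timestamps arrive in non-decreasing order for each card.
        """
        events = self._events[card_id]
        events.append(timestamp)
        cutoff = timestamp - self.window_seconds
        # Drop events that have aged out of the window.
        while events and events[0] <= cutoff:
            events.popleft()
        return len(events)


tracker = VelocityTracker(window_seconds=60)
burst = [tracker.record("card-1", t) for t in (0, 30, 59, 61)]
```

A scoring model could treat a sudden jump in this count as one input among many. The point of the sketch is only that the signal reflects the last few seconds of activity rather than the previous batch run.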

Implementation guide: From prototype to production

Implementation follows a predictable pattern that minimizes risk while maximizing impact:

Week 1: Prioritize high-impact applications

Choose the AI feature causing the most user frustration — typically the one mentioned in every product meeting with qualifiers like "when it works." Focus on applications where performance directly impacts user experience or business outcomes.

Weeks 2-3: Build real-time data pipeline

Configure streaming synchronization from Snowflake to your real-time database for your chosen use case. SingleStore, for example, provides Apache Iceberg integration, which lets both systems access the same data without complex ETL pipelines. Established integration patterns and tooling for real-time data processing already exist for this scenario.
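The exact synchronization mechanism depends on your tooling, so the following is only a conceptual sketch of one common pattern: incremental sync driven by a high-watermark timestamp. It uses in-memory Python structures in place of real databases, and the `Row` type and `sync_increment` function are hypothetical names, not Snowflake or SingleStore APIs.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Row:
    key: str
    value: float
    updated_at: int  # epoch seconds of the last change at the source


def sync_increment(source_rows: Iterable[Row], serving_table: Dict[str, Row], watermark: int) -> int:
    """Upsert rows changed after the watermark and return the advanced watermark."""
    new_watermark = watermark
    for row in source_rows:
        if row.updated_at <= watermark:
            continue  # already synced in an earlier pass
        current = serving_table.get(row.key)
        # Keep the most recent version of each key.
        if current is None or row.updated_at >= current.updated_at:
            serving_table[row.key] = row
        new_watermark = max(new_watermark, row.updated_at)
    return new_watermark


# Stand-ins for the warehouse table and the serving-layer table.
source = [Row("user-1", 1.0, 100), Row("user-2", 2.0, 105)]
serving: Dict[str, Row] = {}
watermark = sync_increment(source, serving, watermark=0)

# A later change at the source is picked up on the next pass.
source.append(Row("user-1", 9.0, 110))
watermark = sync_increment(source, serving, watermark)
```

One known limitation of this pattern is that a row arriving late with a timestamp at or below the current watermark is skipped, which is one reason managed integrations are usually preferable to hand-rolled sync jobs.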

Week 4: Deploy and measure

Launch AI applications using the real-time database for serving while maintaining all training and analytics on Snowflake. Implement comprehensive monitoring to track performance improvements and identify optimization opportunities.

Week 5+: Scale based on results

With proven performance improvements, expand to additional AI applications. Teams consistently report asking: "Why didn't we do this sooner?"

Expected performance results and ROI

The art of the possible when the right performance engine complements Snowflake.

The figures below are representative of what teams have achieved in controlled benchmarks and early-access pilots that pair SingleStore for real-time workloads with Snowflake for deep historical analytics. They are not a guarantee — actual performance depends on data volume, schema design and workload mix — but they highlight the order-of-magnitude gains our customers routinely target.

Recommendation engines

- Response time: 2-8 seconds → <100ms

- Click-through rates: 4.7x improvement

- Revenue attribution: 8% → 18%

- Conversion rates: 2.3x increase

Fraud detection

- Detection speed: 3-8 seconds → <100ms

- Prevention rate: 94% of fraudulent transactions caught

- False positives: 12% → 3% with real-time context

- Business impact: $2.1M fraud prevented (first quarter)

Customer service AI

- Context accuracy: 60% → 95%

- First-contact resolution: 32% → 67%

- User satisfaction: 3.2/5 → 4.6/5

- Support ticket reduction: 40%

These results reflect the performance characteristics that SingleStore's architecture delivers for AI applications — combining the analytical power you already have in Snowflake with the operational speed that modern AI demands.

Strategic benefits of hybrid AI infrastructure

Infrastructure as competitive moat

Organizations building on dual-platform foundations establish technical advantages that compound over time. While competitors wrestle with fundamental infrastructure limitations — choosing between analytical depth and operational speed — teams with hybrid setups can optimize both simultaneously. This strategic flexibility becomes increasingly valuable as AI application complexity grows and performance requirements intensify.

Accelerated innovation velocity

Real-time AI infrastructure transforms how organizations experiment, learn and adapt. Product teams can test algorithmic improvements in production with immediate feedback loops, validating hypotheses in days rather than quarters. Engineering resources shift from infrastructure optimization to feature development. Data science teams iterate on models with live user interactions instead of theoretical offline metrics.

Unlocking new business models

The ability to serve AI applications at scale and speed enables business strategies that were previously impossible to execute:

- Real-time personalization at enterprise scale

- Dynamic pricing that responds to market conditions instantly

- Automated decision-making systems for mission-critical operations

- Interactive AI experiences that feel conversational rather than batch processed

Organizations with the platform advantages to support these capabilities can pursue strategic directions that competitors cannot practically execute.

How to improve AI application performance: quick reference

To optimize AI application performance on Snowflake:

- Use Snowflake for model training and analytics

- Add SingleStore for AI application serving

- Implement streaming data pipelines between systems

- Target sub-100ms response times for user-facing features

- Monitor performance metrics continuously

- Scale based on proven results

The path forward

Your Snowflake investment remains valuable for model training, feature engineering and analytics. But if AI applications feel sluggish, users lose patience or you spend more time optimizing warehouse performance than building features, the solution is architectural.

The modern approach: Use Snowflake for AI development and analytics. Use SingleStore for AI application serving. Optimize each component for its specific job.

This isn't about platform replacement — it's about using the right tool for each requirement and building AI applications that match user expectations. SingleStore's proven track record with enterprise AI applications, combined with seamless Snowflake integration, provides the performance foundation that sophisticated models deserve.

Ready to see what sub-100ms AI applications look like for your specific use cases? Get your free AI performance assessment →

Find out how your sophisticated models can run on infrastructure that keeps up.

Frequently Asked Questions