This post is based on patterns I see regularly working with teams building real-time, data-intensive products. If any of this sounds uncomfortably familiar, you’re not alone.

The stack that works… until it really, really doesn’t

Here’s a story I see on repeat.

You start simple. An operational database for your application (Postgres or MySQL). Maybe MongoDB for flexible JSON or document-centric development. A few dashboards. Nothing exotic.

Then the business asks one totally reasonable question: “Can we see what’s happening right now?”

That’s the moment the “classic data stack” begins assembling itself.

You bolt on an OLAP system for dashboards. Someone asks for full-text search. Then semantic search. Then AI features. Then “agentic” workflows that need fresh, correct context, continuously.

None of those requests are outrageous. What is outrageous is what happens next: your database scalability pain starts propagating to every system in the stack, and teams burn through architectural bandaids faster than they can apply them.

This pattern shows up most often in modern SaaS, fintech, adtech, and other data-intensive platforms where real-time analytics, search, and AI move from “nice to have” to product-critical.

This post is about that pattern: why the classic database stack is so common, where it quietly breaks down under real-time and AI pressure, and why a unified real-time database changes the math.

What SingleStore is actually built for as a real-time database

In database terms, SingleStore is an HTAP (Hybrid Transactional/Analytical Processing) system: it is designed to serve operational and analytical queries on the same dataset in real time, without copying data into a separate warehouse.

In practice, that means supporting fast writes with strong integrity (system-of-record behavior), fast analytics over fresh operational data, and modern search patterns that increasingly show up in AI features, including vector similarity and hybrid search (vector + keyword).
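To make "hybrid search" concrete, here is a minimal sketch of the general idea in plain Python: blend a vector-similarity score with a keyword-match score and rank by the result. This is an illustration only, not how SingleStore implements it; the function names, the `alpha` weight, and the toy documents and two-dimensional "embeddings" are all assumptions made for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def keyword_score(query, text):
    """Fraction of distinct query terms that appear in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    if not terms:
        return 0.0
    return len(terms & words) / len(terms)

def hybrid_rank(query, query_vec, docs, alpha=0.5):
    """Rank docs by alpha * vector score + (1 - alpha) * keyword score."""
    scored = []
    for doc in docs:
        score = (alpha * cosine_similarity(query_vec, doc["vec"])
                 + (1 - alpha) * keyword_score(query, doc["text"]))
        scored.append((score, doc["id"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored]

# Hypothetical documents with made-up 2-D embeddings.
docs = [
    {"id": "a", "text": "refund policy for card payments", "vec": [1.0, 0.0]},
    {"id": "b", "text": "chargeback dispute process", "vec": [0.9, 0.1]},
    {"id": "c", "text": "office holiday schedule", "vec": [0.0, 1.0]},
]
ranking = hybrid_rank("refund policy", [1.0, 0.0], docs)
print(ranking)  # ['a', 'b', 'c']
```

Document "b" shares no keywords with the query but still ranks above "c" because its vector is close to the query vector. That is the behavior hybrid search is after: exact terms when they exist, semantic neighbors when they don't.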

If you want the formal overview, I recommend starting here: https://www.singlestore.com/cloud/

If you’re evaluating AI retrieval specifically, the material on vector search in SingleStore and on hybrid search patterns will get you going.

SingleStore also includes built-in ingestion capabilities (“Pipelines”) for streaming and batch sources like Kafka and object storage, without always requiring additional middleware just to keep data fresh.

That combination – operational workloads, analytics, and modern search in one system – is exactly what the classic stack struggles to approximate after the fact.

What “built on SingleStore” looks like in practice

This isn’t theoretical. SingleStore maintains customer stories across industries (retail, finance, adtech, cybersecurity, telecom, healthcare) where the common thread is real-time performance at scale. If you want the “who’s actually running this in production” view, check out some of our customer case studies.

And if you’ve ever felt the pain of asking search infrastructure to moonlight as an analytics engine, that problem is worth calling out directly. I previously wrote an article about it: Elastic OLAP weaknesses.

The point isn’t that every company needs hyperscale. It’s simpler than that. If real-time behavior sits in the critical path of your product experience, your data architecture can’t treat real-time as an edge case.

Why the classic OLTP and OLAP stack exists in the first place

Architecturally, the classic model separates systems of record (OLTP), analytical processing (OLAP), and search into distinct platforms, and that separation exists for a reason.

Postgres and MySQL are excellent at OLTP and data integrity. MongoDB can be a pragmatic choice for document-centric development. Snowflake and BigQuery are strong for large-scale analytics and BI. Databricks shines when you’re building a lakehouse-centric platform with heavy data engineering and ML. ClickHouse is extremely strong for analytical event workloads in the right shape.

None of these tools are “bad”. The problem shows up when a collection of specialized systems is asked to behave like a single real-time substrate for operational queries, analytics, search, AI retrieval, and continuous freshness—all at once.

How the classic stack quietly assembles itself

The blueprint is familiar. Teams start with MongoDB or another document store when the data is unstructured or still changing quickly. They rely on MySQL or Postgres for structured transactional workloads. As soon as analytics start to slow things down, an OLAP system gets added. When search becomes painful, full-text search usually follows, often with Elasticsearch. And when AI features enter the picture, vector search or even a separate vector database shows up as well.

At that point, everything is held together with ETL and ELT jobs, CDC pipelines, caches, embedding workflows, and index rebuilds. This approach does work. Real products are built this way every day, and many teams reach impressive scale using exactly this pattern.

It is also common for a reason. Each decision makes sense in isolation. Each tool solves a very real problem at the moment it is introduced, even if the overall architecture becomes harder to reason about and harder to operate over time.

The built-in failure mode boils down to this: every new requirement leads to another system, and every new system introduces another place where performance, reliability, or cost eventually hits a ceiling.

How pain propagates across OLAP, search, and AI systems

The problems don’t show up all at once. They show up as a series of reasonable responses to new requirements, until those responses start interacting.

It usually starts with dashboards. When they’re slow, teams scale the analytics cluster, tune partitions, add caching, pre-aggregate, and adjust pipeline cadence. That’s all manageable—right up until dashboards are expected to reflect seconds-old data instead of hours-old data. At that point, analytics stops being downstream and moves directly into the critical path of the product.

The same tension appears with “real-time analytics”. If OLTP and OLAP live in different systems, real-time becomes a data movement problem. You’re dealing with CDC configuration, backfills, schema changes, late-arriving events, reconciliation windows, and correctness guarantees. Snowflake’s Snowpipe, Streams/Tasks, and Snowpipe Streaming are real capabilities—but they also make the underlying reality explicit: you’re operating pipelines just to keep the analytical state aligned with operational truth.
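A back-of-the-envelope model shows why this becomes a data movement problem. The sketch below assumes a periodic sync job between the operational and analytical systems; the function name and both parameters are hypothetical, and real pipelines add retries, backfills, and queueing that this ignores.

```python
def visibility_lag_seconds(sync_interval_s, load_duration_s):
    """Rough lag before a committed change is visible downstream.

    Assumes changes arrive uniformly within a sync interval and each
    sync takes a fixed time to load. Returns (worst_case, average).
    """
    # Worst case: the change commits just after a sync starts reading,
    # so it waits a full interval and then the load time.
    worst_case = sync_interval_s + load_duration_s
    # On average a change waits half an interval before being picked up.
    average = sync_interval_s / 2 + load_duration_s
    return worst_case, average

# Hypothetical numbers: sync every 5 minutes, 45 seconds to load.
print(visibility_lag_seconds(300, 45))  # (345, 195.0)
```

Under these assumptions, the only ways to reach "seconds-old" are to shrink the interval and the load time toward zero, which is exactly the pipeline you then have to operate, or to avoid moving the data at all.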

Specialised analytics systems bring their own tradeoffs. ClickHouse, for example, is exceptional at high-performance analytics. But like any purpose-built system, it makes explicit decisions around replication and consistency based on configuration and ingestion patterns. That’s not a criticism. It’s a design choice. The issue is when “bolt-on analytics” quietly turns into “bolt-on operational correctness”, and suddenly you’re having SLA conversations you never planned for.

Cost models can become the next constraint. BigQuery’s on-demand pricing works very well for certain workloads and very poorly for others—particularly scan-heavy, high-concurrency product interactions. Many teams don’t encounter this until product features start driving complex query patterns at scale. Again, not “bad,” just a cost model that penalises certain real-time usage patterns.
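The arithmetic behind that surprise is simple. On-demand pricing bills by the volume of data a query processes, so a rough estimate looks like the sketch below. Every number here is a placeholder I made up for illustration, including the price per TiB; check the current price list before using this for planning.

```python
TIB = 1024 ** 4  # bytes in one tebibyte

def monthly_scan_cost(bytes_scanned_per_query, queries_per_day,
                      price_per_tib, days=30):
    """Estimated monthly cost when billing is proportional to bytes scanned."""
    tib_scanned = bytes_scanned_per_query * queries_per_day * days / TIB
    return tib_scanned * price_per_tib

# Hypothetical workload: a product feature that scans 10 GiB per request,
# called 50,000 times a day, at a placeholder price of 5.0 per TiB.
cost = monthly_scan_cost(
    bytes_scanned_per_query=10 * 1024 ** 3,
    queries_per_day=50_000,
    price_per_tib=5.0,
)
print(cost)  # 73242.1875
```

The same query run a few hundred times a day by analysts would cost a small fraction of that. The query didn't change; the concurrency did.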

Then search enters the picture, and complexity multiplies. Full-text search brings its own indexing lifecycle, shard strategies, and failure modes. Vector search adds embedding growth, memory-intensive indexes, and recall-versus-latency tuning that quickly becomes a product requirement rather than an implementation detail. Even when each component is best-of-breed, you’re now operating a distributed application assembled from distributed systems.
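Recall is the number that makes the recall-versus-latency tradeoff measurable: of the true top-k neighbors, how many did the approximate index actually return? A minimal sketch, with hypothetical document IDs standing in for real search results:

```python
def recall_at_k(exact_ids, approx_ids, k):
    """Share of the exact top-k results that also appear in the approximate top-k."""
    exact_top = set(exact_ids[:k])
    if not exact_top:
        return 0.0
    return len(exact_top & set(approx_ids[:k])) / len(exact_top)

# Hypothetical result lists: exact (brute-force) vs. approximate index.
exact = [7, 3, 9, 1, 4]
approx = [7, 9, 3, 8, 2]

print(recall_at_k(exact, approx, k=3))  # 1.0
print(recall_at_k(exact, approx, k=5))  # 0.6
```

Tuning an approximate index usually means trading some of this number for lower latency or memory. Once users notice missing results, that trade stops being an implementation detail.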

This is where propagation kicks in. In the classic stack, ETL and CDC act as the circulatory system. When they slow – or break – everything downstream degrades. Dashboards drift. Search goes stale. Retrieval for RAG pulls outdated context. Fraud and personalisation features suffer. The response is predictable: scale the pipeline, the warehouse, the search cluster, the cache, the operational replicas.
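One way to see the blast radius is to write the stack down as a dependency graph and ask what sits downstream of a given component. The graph below is a hypothetical example of a classic stack, not a description of any specific deployment; the component names are mine.

```python
from collections import deque

def downstream_of(graph, failed):
    """Return every component reachable from `failed` (breadth-first)."""
    seen = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

# Hypothetical stack: each key feeds data to the systems in its list.
graph = {
    "oltp": ["cdc"],
    "cdc": ["warehouse", "search_index", "embedding_job"],
    "warehouse": ["dashboards"],
    "search_index": ["search_api"],
    "embedding_job": ["vector_index"],
    "vector_index": ["rag_retrieval"],
}

affected = downstream_of(graph, "cdc")
print(sorted(affected))
```

In this sketch a stalled CDC pipeline degrades seven downstream components, while a warehouse problem touches only the dashboards. The pipeline is the single point where freshness for everything else is decided.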

There comes a point at which teams realise they’re no longer scaling a product. They’re scaling a platform.

AI agents tend to expose this fragility fastest. When AI agents hallucinate or underperform, the fix is rarely a better prompt. It’s usually better retrieval, fresher grounding data, tighter joins between context and truth, and stronger governance. In a classic stack, making those changes ripples through embeddings, pipelines, vector indexes, CDC flows, warehouse models, and caches.

At that stage, the overhead moves from being just technical to organisational.

Why unifying the critical path with SingleStore changes things

The case for SingleStore is not about having one database to rule everything. It is much simpler than that.

When real-time behavior defines your product, what usually matters most is how many moving parts sit in the path that controls latency, correctness, and cost. The more systems involved in getting data in, serving operational queries, running analytics, and supporting AI retrieval, the harder it becomes to keep performance predictable and the harder it is to reason about failures when something goes wrong.
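The "moving parts" argument has simple arithmetic behind it. If a request needs every component in a path to be up, and failures are independent (a simplifying assumption that real systems only approximate), availability multiplies and latency adds. The figures below are illustrative, not measurements.

```python
import math

def path_availability(availabilities):
    """Availability of a path where every component must be up.

    Assumes independent failures, which is a simplification.
    """
    return math.prod(availabilities)

def path_latency_ms(latencies_ms):
    """Latency of a path whose components are called one after another."""
    return sum(latencies_ms)

# Hypothetical: one system vs. five systems, each 99.9% available.
one_system = path_availability([0.999])
five_systems = path_availability([0.999] * 5)

print(f"{one_system:.4f}")    # 0.9990
print(f"{five_systems:.4f}")  # 0.9950
print(path_latency_ms([2, 15, 40]))  # 57
```

Five components at 99.9% each give a path that is available roughly 99.5% of the time, about five times the downtime of a single component, before counting the pipelines that connect them.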

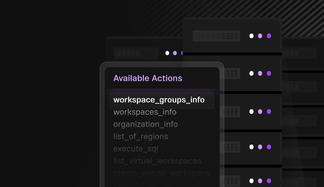

With SingleStore, the goal is to reduce how many separate systems are involved in those core paths. Ingestion, operational queries, real-time analytics, and AI retrieval patterns like vector and hybrid search can all run in the same engine, instead of being stitched together across multiple services and layers of application code.

That does not mean there is nothing left to tune. There always is. The practical difference is whether you are tuning one system that understands the full workload, or trying to coordinate behavior across four or five different ones, each with its own scaling limits and operational quirks.

Cost isn’t just infrastructure. It’s compounded effort

At some point, leadership usually asks the right question: what does all of this mean for cost?

In a classic multi-system stack, costs tend to compound in fairly predictable ways. The same data ends up stored in several places, so storage grows faster than usage. Each system needs its own compute headroom and peak capacity planning, even if it only handles part of the workload. Moving data between systems adds its own tax through CDC and ETL tools, failed jobs, backfills, and the operational burden of keeping pipelines healthy. On top of that, teams need specialists who know how to tune and operate each layer, which further increases long-term cost.
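The storage part of that compounding is easy to sketch. The model below multiplies one logical dataset by the share of it that each system keeps a copy of; the system names and factors are invented for illustration, and compute headroom, pipeline tooling, and staffing would all come on top.

```python
def stack_storage_gb(logical_gb, copy_factors):
    """Total stored GB when each system keeps some fraction of the dataset."""
    return sum(logical_gb * factor for factor in copy_factors.values())

# Hypothetical: fraction of the logical dataset each system stores.
copy_factors = {
    "oltp": 1.0,
    "warehouse": 1.0,
    "search_index": 0.6,
    "vector_index": 0.3,
    "cache": 0.1,
}

total_gb = stack_storage_gb(1000, copy_factors)
amplification = total_gb / 1000
print(round(total_gb), round(amplification, 2))  # 3000 3.0
```

In this made-up example, 1 TB of logical data becomes roughly 3 TB of stored data, and every additional terabyte of growth is paid for three times.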

What makes this especially painful is that spending rarely scales cleanly with users or event volume. It scales with requirements. Add real-time dashboards, and analytics has to scale. Add search, and now search infrastructure needs to scale. Add vector workloads, and suddenly embeddings, indexing, and memory footprints grow as well. Add governance, and more tooling appears. Add agent-driven workflows, and freshness and correctness become non-negotiable, which usually means even more systems and more redundancy.

SingleStore’s position is that a unified platform that scales linearly can reduce these surprise architecture taxes by removing duplication from the critical path, so growth in product capabilities does not automatically translate into growth in system sprawl.

A practical way to think about the decision

The classic stack works well when analytics can tolerate minutes or hours of freshness, search isn’t core to the product experience, AI features are experimental, and you have platform headcount to operate multiple systems.

You should strongly consider a unified real-time platform when your product depends on seconds-old data, needs operational and analytical queries on the same dataset, or is building search-heavy and AI-heavy experiences where latency and correctness are existential risks.

If you’re already deep into the classic stack, this doesn’t require a rip-and-replace fantasy. The pragmatic path I see working is keeping your system of record initially, moving real-time analytics and AI retrieval into a unified store, and reducing duplication over time as confidence grows.

Conclusion: treating real-time as the default, not the exception

The classic data stack isn’t wrong. It’s optimized for a world where analytics is downstream, search is separate, AI is experimental, and real-time is the exception.

That’s not the world most modern products are building for.

If your application depends on real-time decisions, personalization, risk controls, or AI-driven experiences, your data architecture has to treat real-time as the default—not the edge case.

SingleStore exists for that moment: when you stop scaling bandaids and start scaling the product.

Next step: Evaluate SingleStore Helios on a representative real-time workload to validate latency, concurrency, and cost characteristics in practice.