PostgreSQL (Postgres) is a dependable and widely used database, known for its strong SQL support and open-source flexibility. It’s often the first choice for developers building new applications thanks to its simplicity and mature ecosystem.

But as usage grows — more users, more data, more demands — teams often begin to notice the cracks. Scaling Postgres beyond a single node introduces real complexity. And when applications introduce AI, analytics or real-time workloads, the effort to maintain performance can outweigh the benefits of sticking with Postgres.

Let’s take a closer look at how scaling PostgreSQL typically unfolds, what bottlenecks start to appear and why many teams eventually look for alternatives.

The common scaling path for Postgres

When PostgreSQL starts to buckle under production workloads, the playbook is familiar:

1. Vertical scaling

The simplest move is to upgrade your instance: more RAM, more CPU, faster storage. But vertical scaling has diminishing returns and hits a hard ceiling eventually. And cost climbs fast.

2. Read replicas

Postgres supports asynchronous read replicas to offload query volume. But writes still flow through a single primary. As your application grows, you run into replication lag, failover complexity and routing logic to make sure reads go to the right place at the right time.

3. Partitioning

Postgres supports table partitioning — but it’s manual, brittle and requires careful planning. As partitions grow or shift over time, maintaining them adds complexity. Developers have to think not just about data, but how it’s laid out on disk.

4. Sharding with extensions (e.g. Citus)

Citus can distribute queries across shards, but fundamentally changes how Postgres works. You now need to think about distributed query planning, metadata synchronization and rebalance logic. At this point, Postgres is no longer “simple.”

5. Workarounds: Caching, queues, ETL pipelines

To get around Postgres’s limitations, teams often start adding supporting tools: Redis for faster reads, Kafka for ingestion or data pipelines to BigQuery or Snowflake for analytics. At first, these seem like targeted solutions. But each addition introduces latency, duplication and new points of failure.

Suddenly, your application isn’t running on a single, well-understood database but strung together across caches, queues, batch jobs and cloud services. Every new tool means more configuration, more monitoring and more things that can silently break at 3 a.m.

The operational cost rises, but so does the human cost. You don’t just need one backend engineer anymore, instead you need a caching specialist, a data engineer, someone to own the pipeline and someone else to maintain the warehouse queries. And once you have a pipeline, you probably need another person to QA the freshness and accuracy of data across systems.

It slows everything down. Teams end up spending more time keeping the system from falling over than actually building new features. Instead of working in one unified system, you're managing five partial systems — and trying to stitch together consistent answers across them.

Beyond architecture: the technical limits of Postgres

The challenges of scaling PostgreSQL aren’t just architectural; they're rooted in how it handles data under the hood.

Postgres is a row-based engine, making it well-suited for transactional operations. But when it comes to large-scale analytical queries, row-based storage slows things down. Scanning massive tables, computing aggregations or filtering across columns all become increasingly inefficient as datasets grow.

High write concurrency introduces another problem: lock contention. When multiple processes try to update the same rows or tables, Postgress transaction model can throttle performance. Combined with its write-ahead logging and single-node write architecture, this creates a natural ceiling for ingestion throughput. For workloads involving real-time data or streaming inserts, this limitation becomes increasingly painful.

Over time, performance tends to degrade. As tables grow, indexes bloat, queries slow down and vacuuming becomes more important and more unpredictable. The autovacuum process doesn’t always trigger when you need it and when it does, it consumes system resources or adds latency at the worst possible time.

To work around these limits, many teams adopt tools like DuckDB, a fast, in-process analytical database designed for columnar query workloads. DuckDB is excellent at slicing and aggregating large datasets and it’s often used alongside Postgres for dashboards, data science or AI pipelines. It fills the analytical gap Postgres struggles with, especially when teams pull data into memory for local processing or into a batch layer.

But DuckDB is not a transactional database. It doesn’t support concurrent users or real-time ingest. It’s a great companion, but not a replacement — adding speed, but also another layer to maintain. Now you’re dealing with two systems, two storage layers and the complexity of moving data between them.

This kind of patchwork architecture may work for a while, but as the system grows, these trade-offs compound. You’re not just tuning a database — you're managing a pipeline of tools that weren’t designed to scale together.

AI workloads push Postgres even further

Modern applications are increasingly integrating AI: embeddings, semantic search, real-time inference and hybrid query pipelines. These introduce new demands that Postgres wasn’t designed to handle.

Storing and querying vector embeddings with pgvector is possible, but limited by Postgres’s row-store architecture and single-node design. Real-time feature extraction and mixed transactional-analytical workloads push the engine beyond its comfort zone. AI agents and services that access data concurrently can trigger additional lock contention and throughput constraints.

The alternative: Unified, scalable SQL with SingleStore

Many teams reach a point where scaling Postgres becomes a full-time job. That’s when they begin exploring options like SingleStore.

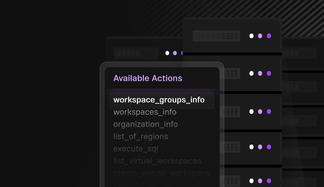

SingleStore offers a distributed SQL engine that supports both transactional and analytical workloads, all in one system. It handles scaling horizontally out of the box — without manual sharding or partition planning. Developers don’t need to bolt on additional tools just to make it work.

Unlike Postgres, SingleStore combines rowstore and columnstore formats in a single engine. It supports high-speed ingestion, real-time queries and large-scale analytics. Native vector search is built in, allowing teams to run similarity queries and structured joins in the same SQL workflow.

The result is a simpler, faster architecture where ingesting events, running inference and serving applications can happen all in one place — without the architectural workarounds and scale ceilings of Postgres.

Postgres is great — until it isn’t

Postgres remains an excellent database for many use cases. But when scale, performance and real-time demands start to collide, the friction adds up. You spend more time managing replication lag, tuning vacuum and compensating for write bottlenecks than building features.

If you’re at that point, it might be time to reevaluate whether your database is helping you grow — or holding you back.

SingleStore gives teams a way to move beyond the limits of Postgres, and unlock the speed and scale modern applications demand.

Frequently Asked Questions

.png?width=24&disable=upscale&auto=webp)

.jpg?width=24&disable=upscale&auto=webp)