Exploring Manhattan, Euclidean, Cosine and dot product methods.

In generative AI, data science, machine learning and analytics, understanding the distance and relationship between data points is crucial. Measuring the distance between vectors is how applications built on Large Language Models (LLMs) find relevant context and retrieve data. Large amounts of unstructured data can be stored in a vector database as embeddings: points in a vector space where, in the Euclidean case, distances are calculated from Cartesian coordinates using the Pythagorean theorem.

Whenever a user submits a query, the closest-matching results are retrieved from the database. It is worth seeing how the data is stored and how this calculation retrieves particular information.

There are many advanced ways to calculate the distance between the vectors. In this article, we are going to explore some important distance metrics like Manhattan distance, Euclidean distance, Cosine distance and dot product.

What are vectors in the context of machine learning?

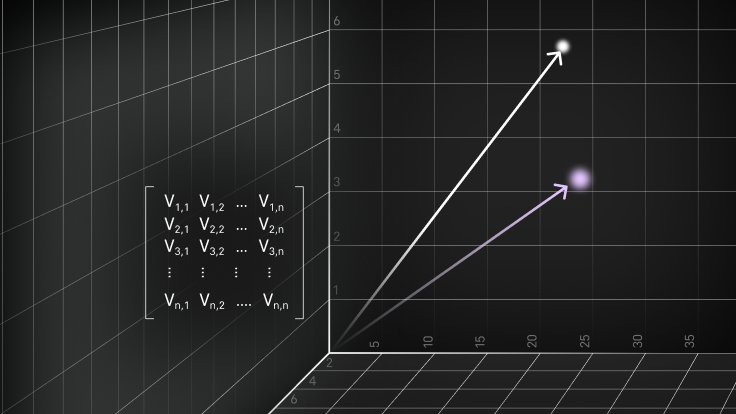

In the realm of generative AI, vectors play a crucial role as a means of representing and manipulating complex data. Within this context, vectors are often high-dimensional arrays of numbers that encode significant amounts of information. For instance, in the case of image generation, each image can be converted into a vector representing its pixel values or more abstract features extracted through deep learning models.
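As a minimal sketch of the pixel-value case, the snippet below flattens a tiny grayscale image into a vector. The 2x3 array and its values are hypothetical, chosen only for illustration; real systems usually rely on features extracted by a model rather than raw pixels.

```python
import numpy as np

# A hypothetical 2x3 grayscale "image"; each entry is a pixel intensity (0-255).
image = np.array([[0, 128, 255],
                  [64, 32, 16]], dtype=np.uint8)

# Flatten row by row and scale to the range [0, 1] to get a 6-dimensional vector.
vector = image.astype(np.float32).ravel() / 255.0

print(vector.shape)  # (6,)
```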

These vectors become the language through which AI algorithms understand and generate new content. By navigating and modifying these vectors in a multidimensional space, generative AI produces new, synthetic instances of data (whether images, sounds or text) that mimic the characteristics of the original dataset. This vector manipulation often involves calculating the distance between two vectors to measure how closely related the items they represent are. This process is at the heart of AI’s ability to learn from data and generate realistic outputs based on that learning.

What is vector similarity search?

Queries involve finding the nearest neighbors to a given vector in the high-dimensional space. This process, known as vector similarity search and often implemented as Approximate Nearest Neighbor (ANN) search for speed, looks for vectors that are closest in terms of distance (e.g., Euclidean distance or Cosine similarity) to the query vector. This method captures the similarity in context or features between different data points, which is crucial for tasks like recommendation systems, similarity searches and clustering.

Consider the unstructured data from various sources as shown in the previous image. The data passes through an embedding model (from OpenAI, Cohere, Hugging Face, etc.). The content is first split into small chunks, and the text in each chunk is broken into tokens, a step known as tokenization. The model then maps each chunk to a list of numbers: its vector embedding. The embeddings are stored in the vector database, where each one is a point in a high-dimensional space (drawn as three-dimensional in the image for simplicity).

In our case, as shown in the preceding image, the unstructured data ‘cat’ and ‘dog’ is first converted into vectors, which sit close to each other in that space because both belong to the pets category. Add a third example, ‘car’, and its vector lands far away from ‘cat’ and ‘dog’ because it doesn’t fall under the pets category.

This way, similar objects are placed close together. When a user query comes in, it is converted into a vector as well, and the most similar objects are returned to the user.
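The idea can be sketched with a brute-force search over three toy vectors. The 3D coordinates, the query vector and the `nearest` helper are all hypothetical, invented for illustration; real embeddings come from a model and have far more dimensions.

```python
import numpy as np

# Hypothetical 3D embeddings, invented for illustration only.
embeddings = {
    "cat": np.array([0.9, 0.8, 0.1]),
    "dog": np.array([0.8, 0.9, 0.2]),
    "car": np.array([0.1, 0.2, 0.9]),
}

def nearest(query, items, k=2):
    """Return the k labels whose vectors are closest to `query` (Euclidean)."""
    distances = {label: float(np.linalg.norm(vec - query))
                 for label, vec in items.items()}
    return sorted(distances, key=distances.get)[:k]

query = np.array([0.9, 0.85, 0.1])  # a made-up "pet-like" query vector
print(nearest(query, embeddings))   # ['cat', 'dog']
```

A production vector database would typically replace this exhaustive scan with an index, but the ranking principle is the same.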

How vector similarity search is calculated

There are different techniques to calculate the distance between the vectors. Dot product, Euclidean distance, Manhattan distance and cosine distance are all fundamental concepts used in vector similarity search. Each one measures the similarity between two vectors in a multi-dimensional space.

Manhattan distance

Manhattan distance is a way of calculating the distance between two points (vectors) by summing the absolute differences of their coordinates. Imagine navigating a city laid out in a perfect grid: the Manhattan distance between two points is the total number of blocks you'd have to travel vertically and horizontally to get from one point to the other, without the possibility of taking a diagonal shortcut. In the context of vectors, it's similar: you calculate the distance by adding up the absolute differences for each corresponding component of the vectors.
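A minimal sketch of that calculation in plain Python follows. The function name and the sample points are illustrative, not taken from any particular library.

```python
def manhattan_distance(a, b):
    """Sum of the absolute differences of corresponding components (L1 distance)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    return sum(abs(x - y) for x, y in zip(a, b))

# Walking from grid corner (1, 2) to (4, 6): 3 blocks east plus 4 blocks north.
print(manhattan_distance([1, 2], [4, 6]))  # 7
```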

Euclidean distance

Euclidean distance, also known as the L2 distance (the L2 norm of the difference between two vectors), is the most direct way of measuring the distance between two points or vectors, resembling the way we usually think about distance in the physical world. Imagine drawing a straight line between two points on a map; the Euclidean distance is the length of this line.

This metric is widely used in many fields including physics for measuring actual distances, machine learning algorithms for tasks like clustering and classification, and in everyday scenarios whenever a direct or as-the-crow-flies distance needs to be determined. Its natural alignment with our intuitive understanding of distance makes it a fundamental tool in data analysis and geometry.
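As a small illustration, the straight-line distance can be computed as the square root of the summed squared differences. The function name and the sample points below are illustrative only.

```python
import math

def euclidean_distance(a, b):
    """Square root of the sum of squared component differences (L2 distance)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# The differences are 3 and 4, so this is the classic 3-4-5 right triangle.
# A grid walk between the same points would cover 3 + 4 = 7 blocks instead.
print(euclidean_distance([1, 2], [4, 6]))  # 5.0
```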

Cosine distance

Cosine similarity measures how closely two non-zero vectors point in the same direction by evaluating the cosine of the angle between them. Cosine distance is defined as 1 minus the cosine similarity, so vectors pointing the same way have a cosine distance of 0. It's not a 'distance' in the traditional sense, but rather a measure of how vectors are oriented relative to each other, regardless of their magnitude. Picture two arrows starting from the same point; the smaller the angle between them, the more similar they are in direction.

This measure is particularly useful in fields like text analysis and information retrieval, where the orientation of the vectors (representing, for example, documents or queries in a high-dimensional space) matters more than their absolute positions or magnitudes.
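The sketch below shows the magnitude-independence in practice, using the common convention that cosine distance equals 1 minus cosine similarity. The helper names and sample vectors are illustrative assumptions.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def cosine_distance(a, b):
    """1 minus the cosine similarity: 0 means the same direction."""
    return 1.0 - cosine_similarity(a, b)

short = [1, 2, 3]
scaled = [10, 20, 30]      # same direction, ten times the magnitude
orthogonal = [-2, 1, 0]    # perpendicular to `short` (their dot product is 0)

print(cosine_similarity(short, scaled))     # approximately 1.0
print(cosine_distance(short, orthogonal))   # approximately 1.0
```

Scaling a vector changes its Euclidean distance to other vectors but leaves its cosine similarity unchanged, which is why this measure suits documents of different lengths.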

Dot product

The dot product captures the relationship between two vectors. Imagine two arrows extending from the same starting point; the dot product quantifies how much one arrow aligns with the direction of the other. Mathematically, it's calculated by multiplying corresponding components of the vectors and then summing these products.

A key characteristic of the dot product is that it's large and positive when vectors point in similar directions, large and negative when they point in opposite directions, and zero when the vectors are perpendicular. Unlike cosine similarity, it also grows with the magnitudes of the vectors. This makes the dot product extremely useful in various applications, like determining if two vectors are orthogonal, calculating the angle between vectors in space or, in physics and engineering, computing the work done by a force. In machine learning and data science, the dot product is instrumental in algorithms like neural networks, where it helps in calculating the weighted sum of inputs.
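A few hand-picked 2D vectors make the sign and magnitude behavior concrete. The `dot` helper and the vectors are illustrative, not part of any library.

```python
def dot(a, b):
    """Multiply corresponding components and sum the products."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    return sum(x * y for x, y in zip(a, b))

a = [2, 0]
print(dot(a, [3, 0]))    # 6: same direction
print(dot(a, [0, 5]))    # 0: perpendicular
print(dot(a, [-3, 0]))   # -6: opposite direction
print(dot(a, [30, 0]))   # 60: same direction, larger magnitude
```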

Handling vectors of different length

When dealing with vectors of different lengths (that is, with different numbers of components), it’s essential to understand how to handle them correctly. In many cases, vectors of different lengths can be treated as if they had the same length by padding the shorter vector with zeros. This is only meaningful when the components the vectors share describe the same features; embeddings produced by different models cannot be compared this way.

For example, consider two vectors, vector a and vector b, with lengths 3 and 4, respectively. To calculate the Euclidean distance between them, we can pad the shorter vector, vector a, with a zero to make it the same length as vector b. The resulting vectors would be:

a = (a1, a2, a3, 0)

b = (b1, b2, b3, b4)

We can then calculate the Euclidean distance between the two vectors using the standard formula:

d = √((a1 - b1)^2 + (a2 - b2)^2 + (a3 - b3)^2 + (0 - b4)^2)

This approach allows us to calculate the distance between vectors of different lengths, which is essential in many applications, such as information retrieval and machine learning. We can apply various distance metrics consistently and accurately by ensuring that vectors are of the same length.
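The padding step can be sketched with NumPy as follows. The helper name `pad_to_same_length` and the sample values are hypothetical, and the sketch assumes the shared components of both vectors describe the same features.

```python
import numpy as np

def pad_to_same_length(a, b):
    """Right-pad the shorter vector with zeros so both have equal length."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n = max(a.size, b.size)
    return np.pad(a, (0, n - a.size)), np.pad(b, (0, n - b.size))

a = [1, 2, 3]
b = [1, 2, 3, 4]
a_padded, b_padded = pad_to_same_length(a, b)

print(a_padded)                             # [1. 2. 3. 0.]
print(np.linalg.norm(a_padded - b_padded))  # 4.0
```

Only the fourth component differs here, so the distance reduces to the term (0 - b4)^2 under the square root.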

Best practices for distance calculation

When calculating distances between vectors, there are several best practices to keep in mind:

- Choose the right distance metric: Depending on the application, different distance metrics may be more suitable. Euclidean distance is a common default when magnitude matters, cosine distance fits text embeddings where direction matters more than magnitude, and Manhattan distance is less sensitive to a large difference in a single component because the differences are not squared. Understanding the nature of your data and the requirements of your application will help you select the most appropriate metric.

- Use the correct formula: Make sure to use the correct formula for the chosen distance metric. Using the correct formula ensures accurate distance calculations between two vectors.

- Handle vectors of different lengths correctly: As discussed earlier, when dealing with vectors of different lengths, pad the shorter vector with zeros to make it the same length as the longer vector before applying a distance formula. This ensures that all components are accounted for in the distance calculation.

- Use efficient algorithms: Depending on the size of the vectors and the number of calculations, using efficient algorithms can significantly improve performance. For example, using a loop to calculate the distance between two vectors can be slower than using a vectorized operation. Leveraging libraries like NumPy can help optimize these calculations, especially when working with large datasets or high-dimensional vectors.

By following these best practices, you can ensure that your distance calculations are accurate, efficient, and suitable for your specific application. Whether you are working in machine learning, data analysis, or information retrieval, these guidelines will help you make the most of your vector data.
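To illustrate the point about efficient algorithms, the sketch below computes the same Euclidean distances twice: once with a Python loop and once with a single vectorized NumPy call. The array sizes and random data are arbitrary assumptions, and no timing figures are claimed here.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
query = rng.random(64)            # one hypothetical query vector
database = rng.random((200, 64))  # 200 hypothetical stored vectors

# Loop version: one distance at a time, component by component.
loop_distances = [
    np.sqrt(sum((q - x) ** 2 for q, x in zip(query, row)))
    for row in database
]

# Vectorized version: broadcasting subtracts the query from every row at once.
vectorized_distances = np.linalg.norm(database - query, axis=1)

print(np.allclose(loop_distances, vectorized_distances))  # True
print(int(np.argmin(vectorized_distances)))               # index of the nearest vector
```

Both versions return the same numbers; the vectorized form pushes the arithmetic into compiled code, which is usually where the speedup comes from.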

Tutorial

We will use SingleStore Notebooks for this tutorial. If you haven’t already, activate your free SingleStore trial to get started. Let’s dive deeper into the approaches described above through a hands-on tutorial.

Let's take an example of two pets (cat and dog) and visualize them in a 3D space. We will try to find the Manhattan distance, Euclidean distance, Cosine distance and dot product between these two pets.

Once you sign up, click on the Notebooks tab and create a blank Notebook with a name.

You can start running the code in your newly created Notebook. First, install and then import the required libraries.

    !pip install numpy
    !pip install matplotlib

    import matplotlib.pyplot as plt
    import numpy as np

Define attributes of the pets that can be represented in three dimensions. Since pets are complex entities with many characteristics, you'll need to simplify this to a 3D representation. Here's an example:

    from mpl_toolkits.mplot3d import Axes3D

    # Example pets attributes: [weight, height, age]
    # These are hypothetical numbers for illustration purposes
    dog = [5, 30, 2]
    cat = [3, 25, 4]

    fig = plt.figure()
    ax = fig.add_subplot(111, projection='3d')

    # Plotting the pets
    ax.scatter(dog[0], dog[1], dog[2], label="Dog", c='blue')
    ax.scatter(cat[0], cat[1], cat[2], label="Cat", c='green')

    # Drawing lines from the origin to the points
    ax.quiver(0, 0, 0, dog[0], dog[1], dog[2], color='blue',
              arrow_length_ratio=0.1)
    ax.quiver(0, 0, 0, cat[0], cat[1], cat[2], color='green',
              arrow_length_ratio=0.1)

    # Labeling the axes
    ax.set_xlabel('Weight (kg)')
    ax.set_ylabel('Height (cm)')
    ax.set_zlabel('Age (years)')

    # Setting the limits for better visualization
    ax.set_xlim(0, 10)
    ax.set_ylim(0, 40)
    ax.set_zlim(0, 5)

    # Adding legend and title
    ax.legend()
    ax.set_title('3D Representation of Pets')

    plt.show()

Upon executing the code, you should see the two pets plotted as vectors in this 3D space.

Now, let’s calculate the distance between the vectors using various techniques.

Manhattan distance

    L1 = [abs(dog[i] - cat[i]) for i in range(len(dog))]
    manhattan_distance = sum(L1)

    print("Manhattan Distance:", manhattan_distance)

Euclidean distance

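    # Squared difference for each component
    squared_diffs = [(dog[i] - cat[i])**2 for i in range(len(dog))]

    # Square root of the summed squared differences
    euclidean_distance = np.sqrt(np.array(squared_diffs).sum())

    print("Euclidean Distance:", euclidean_distance)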

Cosine distance

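    # Cosine similarity: cosine of the angle between the two vectors
    cosine_similarity = np.dot(dog, cat) / (np.linalg.norm(dog) *
                                            np.linalg.norm(cat))
    cosine_distance = 1 - cosine_similarity  # distance is 1 minus similarity
    print("Cosine Similarity:", cosine_similarity)
    print("Cosine Distance:", cosine_distance)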

Dot product

    np.dot(dog, cat)
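As a cross-check on the step-by-step calculations, the same four quantities can be computed with vectorized NumPy calls. This is a sketch that redefines the hypothetical `dog` and `cat` vectors as arrays so that it runs on its own.

```python
import numpy as np

dog = np.array([5, 30, 2])
cat = np.array([3, 25, 4])

manhattan = np.linalg.norm(dog - cat, ord=1)  # sum of absolute differences
euclidean = np.linalg.norm(dog - cat)         # default is the L2 norm
dot = np.dot(dog, cat)
cosine_similarity = dot / (np.linalg.norm(dog) * np.linalg.norm(cat))

print("Manhattan distance:", manhattan)           # 9.0
print("Euclidean distance:", euclidean)           # sqrt(33), about 5.745
print("Dot product:", dot)                        # 773
print("Cosine similarity:", cosine_similarity)    # about 0.995
print("Cosine distance:", 1 - cosine_similarity)
```

The two pet vectors point in nearly the same direction, which is why the cosine similarity is close to 1 even though the Manhattan and Euclidean distances are not zero.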

The complete code of this tutorial can be found in this GitHub repository: Representing Unstructured Data as Vectors.

SingleStore provides direct support for dot product and Euclidean distance using the vector functions DOT_PRODUCT and EUCLIDEAN_DISTANCE, respectively. Cosine similarity is supported by combining the DOT_PRODUCT and SQRT functions.
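The arithmetic behind that combination can be sketched in Python. This is not SingleStore SQL: the helpers `dot_product` and `cosine_similarity` are hypothetical stand-ins that only mirror the formula, relying on the fact that a vector's magnitude is the square root of its dot product with itself.

```python
import math

def dot_product(a, b):
    """Sum of the products of corresponding components."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (sqrt(a . a) * sqrt(b . b))"""
    return dot_product(a, b) / (
        math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b))
    )

# Same hypothetical pet vectors as in the tutorial: [weight, height, age].
print(cosine_similarity([5, 30, 2], [3, 25, 4]))  # about 0.995
```

If the stored vectors are already normalized to length 1, both square roots equal 1 and the dot product alone gives the cosine similarity.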

SingleStore supports both full-text and vector search, giving developers the best of both worlds. The 8.5 release updates from SingleStore have opened many doors — making big waves in the generative AI space.

Curious to test the power of SingleStore for AI? Get started with SingleStore free today.