The SingleStore Aura container platform now offers credit-based pricing for container resources, enabling teams to build and scale data and AI applications with greater flexibility. This update applies to our SingleStore Notebooks, Jobs, Cloud Functions and future Aura Apps.

Note: These changes are in preview and will be rolled out to all Standard and Enterprise customers in the coming weeks.

The problem we're solving

Development teams need flexible compute resources to handle varying workload demands, from exploratory data analysis in notebooks to production batch processing jobs and serverless functions. Fixed resource limits often force teams to either over-provision or work around constraints, and neither option is good for productivity or cost.

Our new container-based compute model addresses this by letting you:

Choose the right container size for each workload

Scale resources up or down based on actual needs

Run unlimited scheduled jobs without hitting container limits

Access GPU resources for AI/ML workloads when needed

Available container types

Small containers

Ideal for lightweight data exploration, simple queries and development work

Suitable for most notebook sessions and basic ETL jobs

Medium containers

Designed for production workloads, complex data processing and multi-stage pipelines

Recommended for scheduled jobs with moderate resource requirements

GPU containers

Purpose-built for machine learning model training and inference

Accelerated computing for vector operations and AI workloads

Using containers across Aura applications

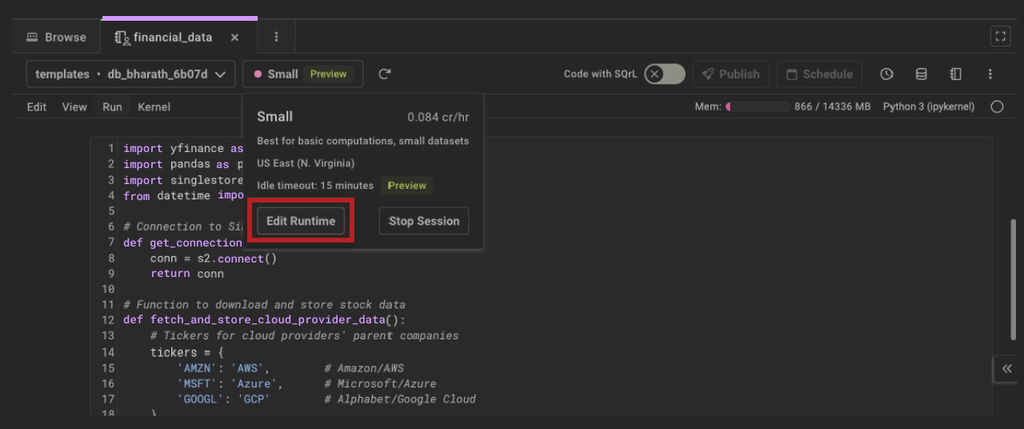

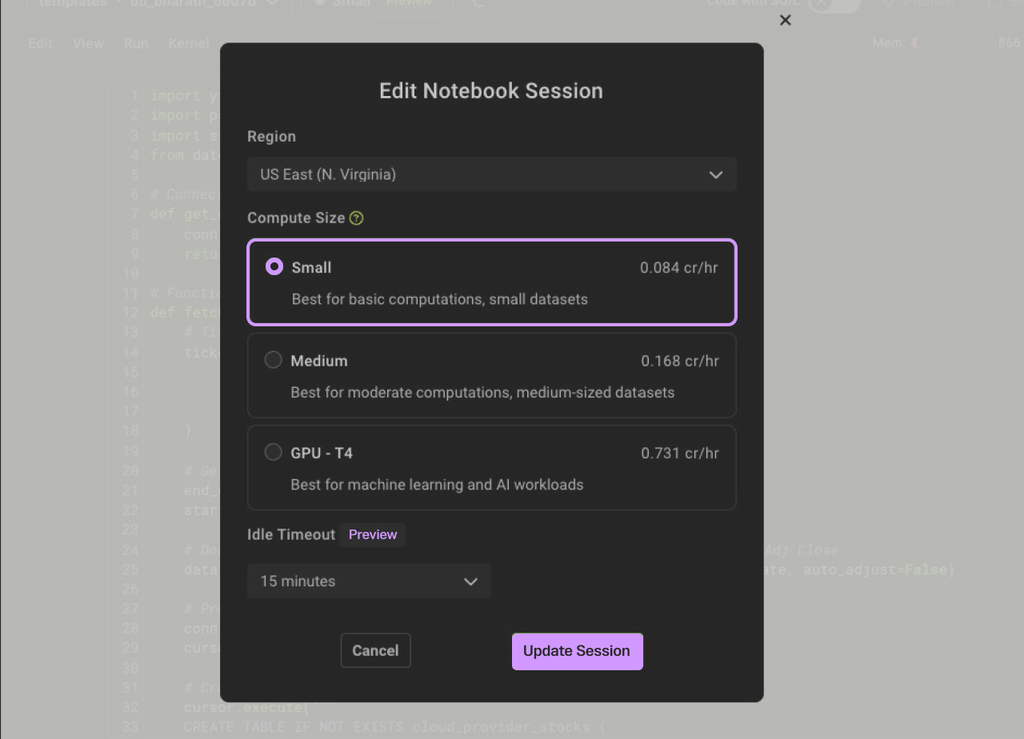

SingleStore Notebooks

When creating or running a notebook, select your container size based on the complexity of your analysis:

Use small containers for data exploration and query development. Switch to medium or GPU containers when working with large datasets or running ML models.

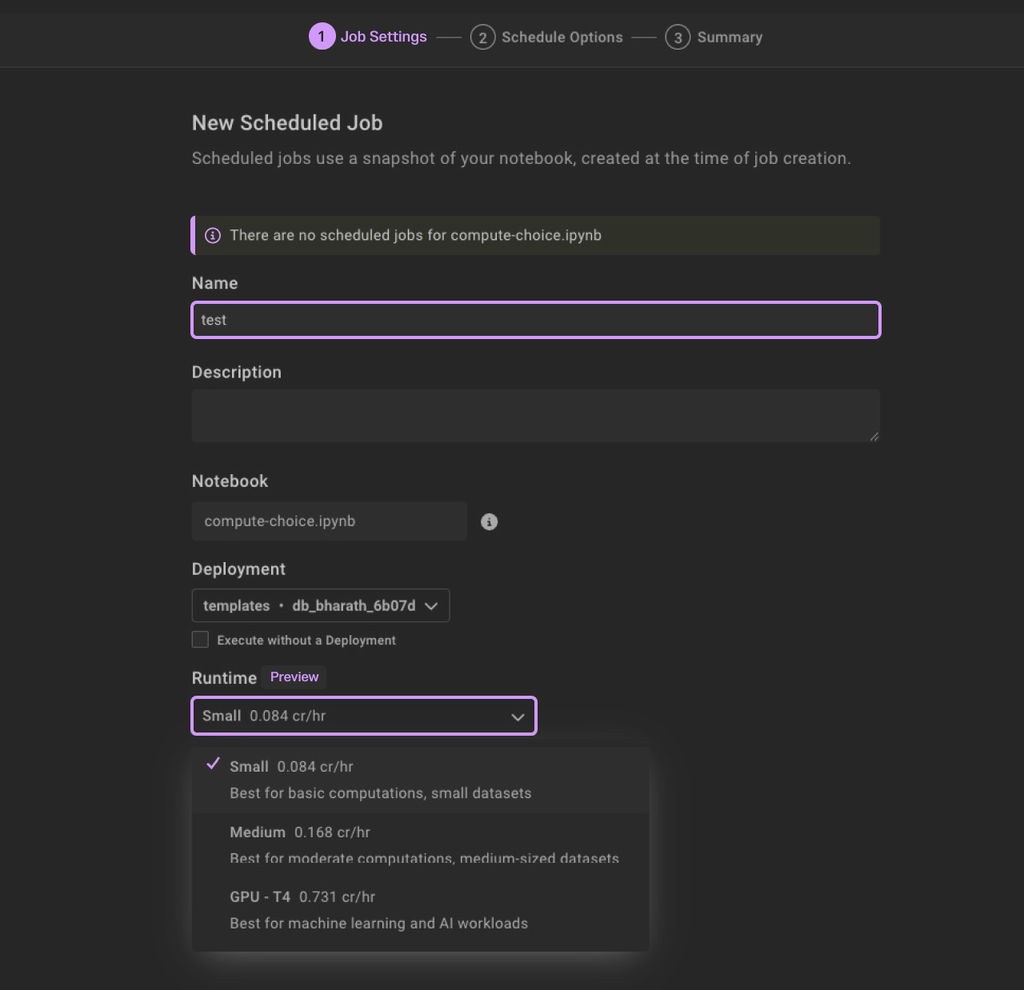

SingleStore Jobs

Configure scheduled jobs to run with appropriate container resources:

Select container size based on job requirements: small for simple data movement, medium for complex transformations, or GPU for ML pipeline jobs.

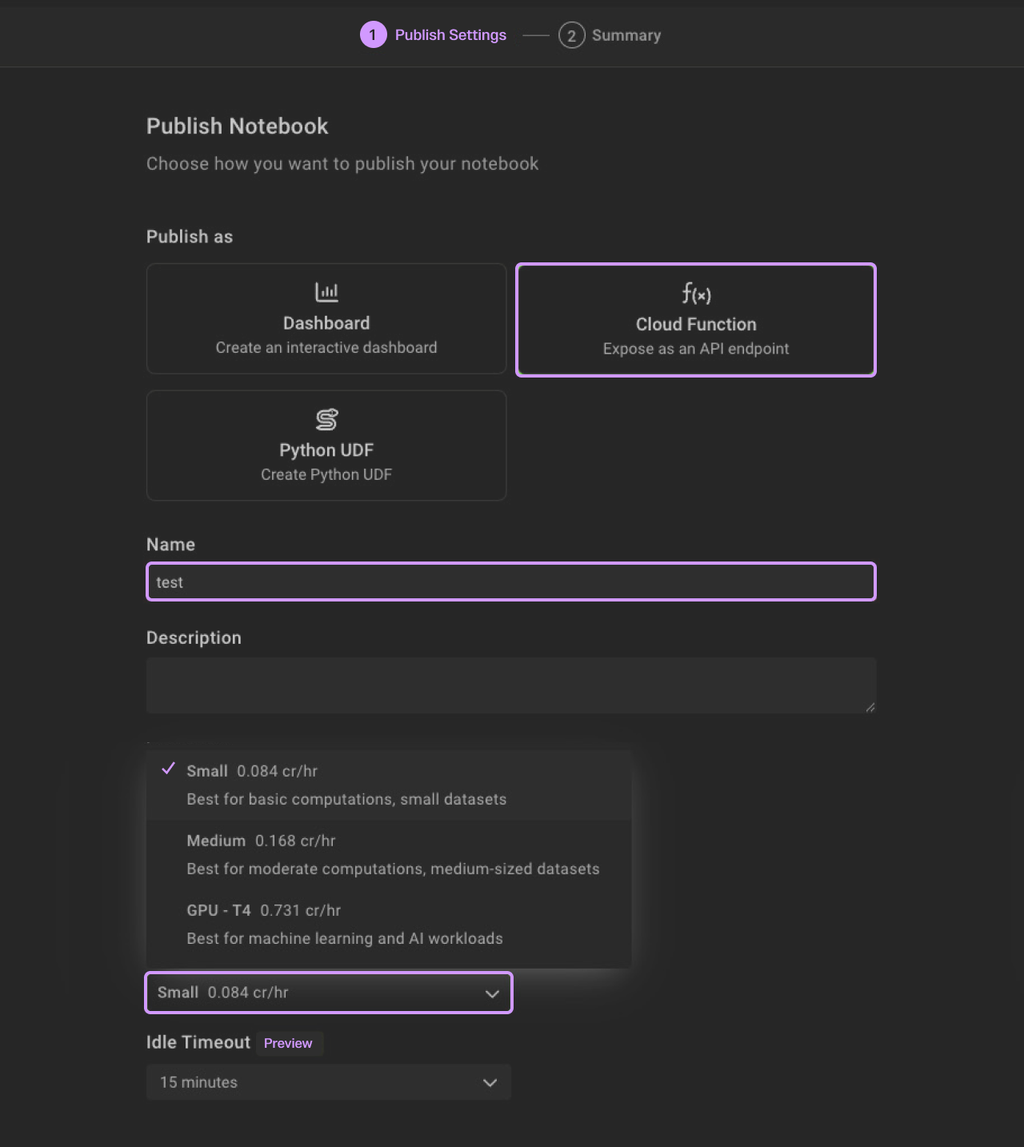

Cloud Functions

Deploy serverless functions with configurable compute:

Match container size to function complexity and expected load. Functions automatically scale within the selected container type.

Practical use cases

Data engineering pipeline

A typical ETL pipeline might use:

Small container for initial data validation functions

Medium container for the main transformation job

Small container for final data quality checks

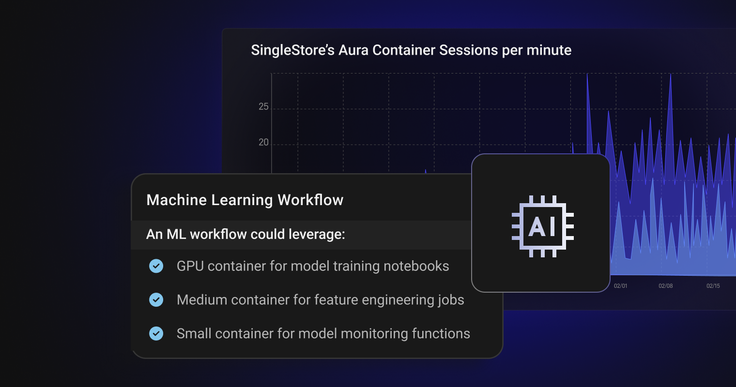

Machine learning workflow

An ML workflow could leverage:

GPU container for model training notebooks

Medium container for feature engineering jobs

Small container for model monitoring functions

Real-time analytics

For streaming analytics:

Medium containers for continuous processing jobs

Cloud Functions with small containers for event-driven processing

Notebooks with varying sizes for ad-hoc analysis

Managing resources effectively

Usage safeguards

To prevent accidental resource consumption:

Maximum 50 active container sessions per organization

Maximum 20 new container sessions per minute

These limits protect against runaway processes while still leaving room for legitimate scaling. In the near future, we plan to give admins options to configure limits per Aura workload.
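To see how the two limits interact, here is a minimal local sketch in Python. It is not a SingleStore API and not how the platform enforces anything; the `SessionGuard` class and its method names are hypothetical, and it assumes "per minute" means a sliding 60-second window, which the limits above do not specify.

```python
import time
from collections import deque

MAX_ACTIVE_SESSIONS = 50   # per organization, from the limits above
MAX_NEW_PER_MINUTE = 20    # per organization, from the limits above


class SessionGuard:
    """Hypothetical local model of the two safeguards (illustration only)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._active = set()
        # Start times of sessions created in the last 60 seconds.
        # Assumption: the per-minute limit is a sliding window.
        self._recent_starts = deque()

    def try_start(self, session_id):
        """Return True if a new session would fit under both limits."""
        now = self._clock()
        # Forget starts that have aged out of the 60-second window.
        while self._recent_starts and now - self._recent_starts[0] >= 60:
            self._recent_starts.popleft()
        if len(self._active) >= MAX_ACTIVE_SESSIONS:
            return False
        if len(self._recent_starts) >= MAX_NEW_PER_MINUTE:
            return False
        self._active.add(session_id)
        self._recent_starts.append(now)
        return True

    def stop(self, session_id):
        """Terminating a session frees a slot under the active-session cap."""
        self._active.discard(session_id)
```

In this model, a burst of 21 session starts within the same minute sees the last one rejected, while terminating idle sessions frees capacity under the 50-session cap without affecting the per-minute count.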

Role-based access control

Administrators can control who creates and manages container workloads:

Assign permissions for creating notebooks, jobs and functions

Set limits on container types available to different teams

Monitor usage across the organization

Getting started and best practices

1. Assess your workload requirements

Identify which processes need small, medium or GPU containers

Estimate usage patterns for different team workflows

Plan resource allocation across projects

2. Optimize container usage

For Notebooks:

Default to small containers for exploration

Upgrade to larger containers only for intensive computations

Terminate idle sessions to avoid unnecessary charges

For Scheduled Jobs:

Profile job requirements before selecting container size

Use smaller containers for frequent, lightweight jobs

Reserve medium/GPU containers for complex batch processing

For Cloud Functions:

Match container size to expected request volume

Use small containers for simple transformations

Consider medium containers for complex logic or high throughput

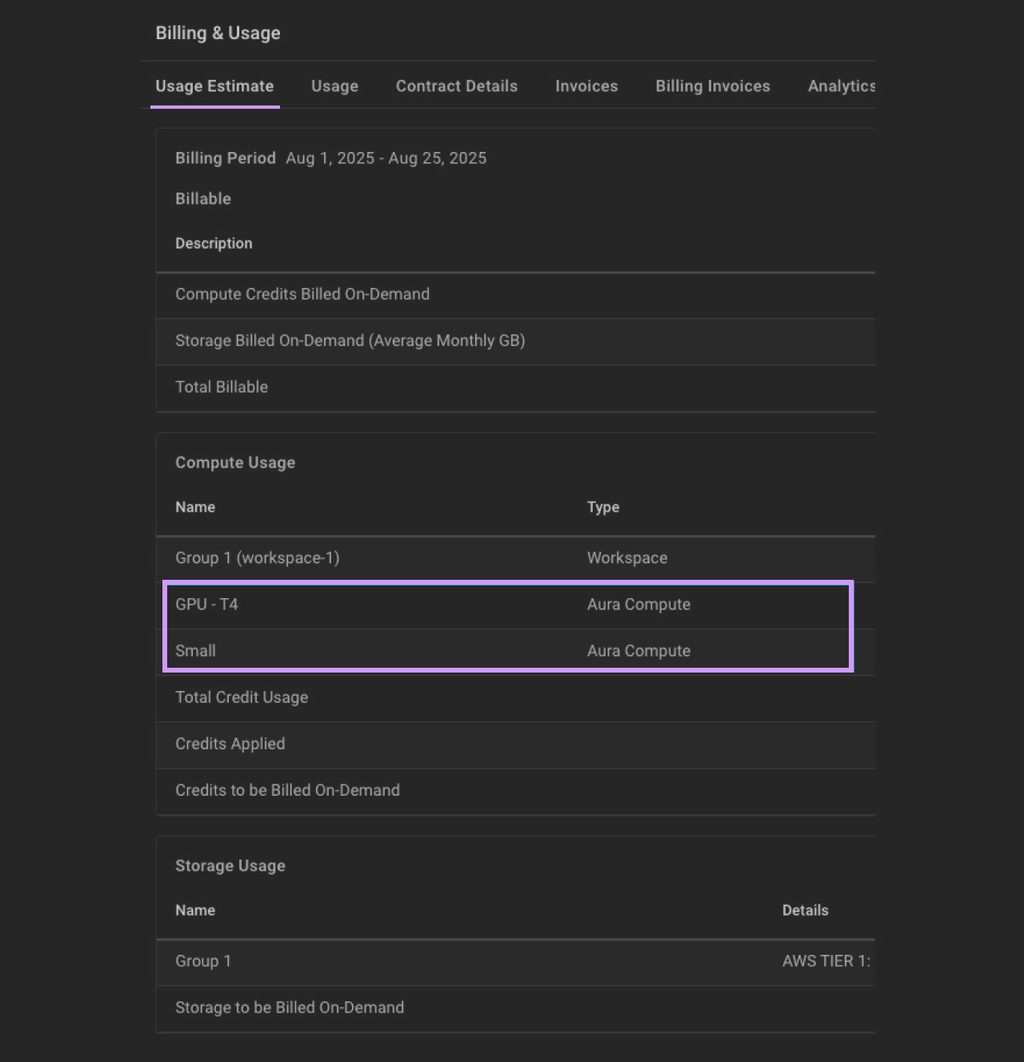

3. Usage and tracking

Head to the Billing & Usage page to estimate your monthly usage and view a breakdown of Aura Apps consumption.

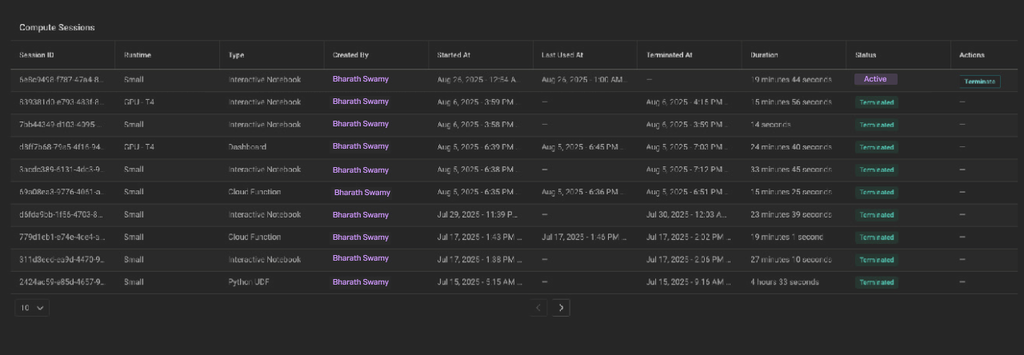

As an Aura Admin, you can check your Aura resource consumption and usage on the Aura Compute sessions page.

Future plans

1. Improved visualizations

We are working on better visualizations and a breakdown of individual app usage to improve monitoring and observability.

2. Ability to configure access controls for each specific role

Set up RBAC to ensure appropriate access:

Admin → Manage all container types

Data Scientists → Access GPU containers for ML work

Analysts → Use small/medium containers for analysis

Developers → Deploy functions with defined container limits
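Since this capability is still on the roadmap, the role mapping above can only be sketched. The following Python snippet models it as a plain lookup table; the role keys, `build_policy` and `can_use` are hypothetical names, not part of any Aura API. Two assumptions are worth calling out: data scientists are given small and medium containers in addition to GPU, and the developer limits are passed in as a parameter because the mapping does not define them.

```python
CONTAINER_TYPES = {"small", "medium", "gpu"}


def build_policy(developer_types):
    """Build a hypothetical role -> allowed container types table.

    developer_types is supplied by the caller because the developer
    limits are not defined in the mapping above.
    """
    unknown = set(developer_types) - CONTAINER_TYPES
    if unknown:
        raise ValueError(f"unknown container types: {sorted(unknown)}")
    return {
        "admin": set(CONTAINER_TYPES),           # manage all container types
        "data_scientist": set(CONTAINER_TYPES),  # assumption: GPU plus small/medium
        "analyst": {"small", "medium"},          # small/medium for analysis
        "developer": set(developer_types),       # caller-defined limits
    }


def can_use(policy, role, container_type):
    """Return True if the role may use the container type; unknown roles get nothing."""
    return container_type in policy.get(role, set())


policy = build_policy(developer_types={"small"})
print(can_use(policy, "analyst", "gpu"))         # False
print(can_use(policy, "data_scientist", "gpu"))  # True
```

Denying unknown roles by default keeps the table safe to extend: a new team gets no container access until someone adds an explicit entry.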

Support and resources

Check out our complete Aura Documentation to learn about all the features we offer. Don't hesitate to reach out with feedback or suggestions for new features you'd like us to prioritize in our product roadmap.

Frequently Asked Questions
