The ability to change a table’s schema without downtime in production is a critical feature of any database system. In spite of this, many traditional relational databases have poor support for it. Quick and easy schema changes was a key advantage of early distributed NoSQL systems, but of course, those systems jettison relational capabilities.

Though conventional wisdom may indicate otherwise, easy schema changes are possible with the relational model. At SingleStore we put careful thought and effort into making sure that ALTER TABLE operations have minimal impact to running workloads. This feature is commonly called an “online” ALTER TABLE. Most relational databases support the notion of an “online” ALTER TABLE, but every vendor has a different definition of what that means. In SingleStore we define a true online ALTER as one that:

1) Does not require doubling the disk or memory use of the table while executing (creating a 2nd copy of the table without destroying the original table is not allowed)

2) Does not lock the table or prevent querying it for long periods of time (read or write) while running (under a second of blocking queries is OK)

3) Does not use excessive system resources while running (CPU, Disk, Network) no matter the size of the table or the workload running against the table

SingleStore is the only distributed relational database able to achieve all three. For example, MySQL Cluster fails to do (1) – it copies the table in many cases. VoltDB, Vertica, and Redshift fail to do (2) – they lock the table throughout the entire ALTER operation, effectively taking down your production system, or requiring tedious juggling of replicas.

Explaining how our ALTER TABLE works it best done by stepping through an example. Let say we wanted to add a column to a table as follows:

CREATE TABLE example(c1 int primary key);

ALTER TABLE example ADD COLUMN c2 VARCHAR(100) DEFAULT NULL;

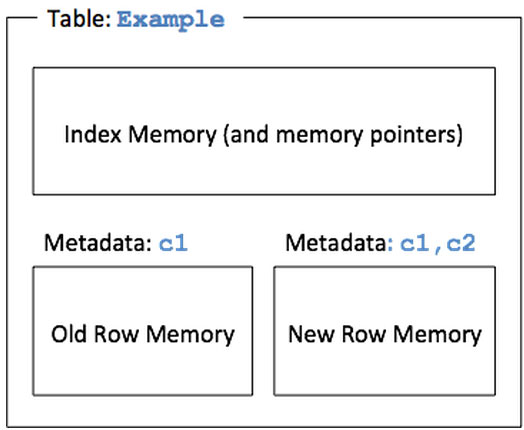

Consider this diagram while we outline how ALTER runs through four phases of execution in the SingleStore rowstore.

The first thing SingleStore does is change the structure (also called the metadata) of the table on every node in the cluster to include the new column, c2 as shown above “New row memory”. A short-lived table lock that blocks queries from executing against the table is held for the duration of the metadata change, which takes less than a second. The distributed lock is required to synchronize all nodes in the cluster so they will start displaying the new column at the same time. No row data has been modified at this point, only the table’s metadata.

Now, SELECT queries against example will see the newly added column, but the column won’t actually exist in the table’s rows just yet. During query execution SingleStore generates the default value for c2 on the fly (NULL in our example) wherever it needs to.

INSERTs (or any write query) will now need to provide data for c2, or c2 will default to NULL. These new rows will be inserted into the table alongside the old rows that don’t yet have c2. We are able to do this because, in SingleStore, indexes are allocated in a separate memory space from the memory for row data (see diagram). Whenever an index needs access to a row it follows a memory pointer. This design allows memory for rows inserted with c2 (inserted after step 1 of the ALTER) to co-exist with rows allocated before the metadata switch that added c2.

A new memory space is set up at the time of the metadata change (“New Row Memory” in the diagram) to allocate rows for the new table schema. Newly inserted rows are allocated in this memory region. The original table rows remain in the “Old Row Memory” region.

- The ALTER now begins transferring the row data from the old row format to the new format. We can do this fairly straightforwardly using the memory space for the old rows (“Old Row Memory” above). The transfer process is essentially the same as a series of table updates. The alter thread commits every few megabytes of rows to avoid duplicating the entire table in a single transaction. It will eventually shrink the old row memory to nothing. Production workloads are happening all throughout this process. They simply check each row to determine if it has the new column or not and patches appropriately. The transfer process can take a while for larger tables, but it doesn’t impact running queries beyond using some CPU.

Our ALTER implementation does have some caveats as a result of our requirement to make the operation a low-impact as possible.

- An ALTER TABLE can’t be cancelled after phase 1 above has completed. The row transfer process (phase 3) is not transactional (this would require doubling memory use) so it can’t be rolled back or stopped once it starts.

- The blocking at phase 1 forces the ALTER to wait for any long running query to finish executing before it starts.

- Some ALTER operations cannot be performed online. For example, you can’t add UNIQUE keys online in the current version SingleStore because the ALTER may run into a duplicate key error in the middle of the operation. Consequently, commands of that sort are locked out.

- Only one ALTER can be run against a table at a time. Since an ALTER is essentially a long-running query, a second ALTER waits until the first one completes. However, you can ALTER different tables at the same time.

In summary, our online ALTER TABLE support novel capability you get when using SingleStore. Adding new indexes or columns without downtime is something our larger enterprise users rely on. Having both the flexibility of painless schema changes and the high powered querying capabilities of a distributed in-memory database is a unique combination.