Our customers love JSON. It's by far the most popular advanced data type in SingleStore beyond traditional numbers, strings, dates and times. Most JSON data has a regular structure, but lots of users push the envelope with complex or unusual JSON structures — particularly sparse JSON (more on that later). Because JSON is so popular, we're continually working to improve our ability to store and query it easily and efficiently.

In this blog, we’ll share how we dramatically improved our sparse JSON handling, enabling us to handle a whole new class of applications. Warning: the writing ahead is wonky in parts — but if you persevere, we think you will come out on the other side with an appreciation for the power of SingleStore, and how it handles JSON for all kinds of apps!

We released our new JSON encoding supporting fast seeks in SingleStore 8.0 after collaborating with our design partners at Heap. They suffered serious performance problems with sparse JSON prior to our changes, and are now getting much better speed. We built these improvements to sparse JSON handling to help them and because we realized many other current and potential customers have similar needs. Here's what Heap had to say about the ultimate results:

"One of the most powerful features of the Heap Platform is the ability to add attributes to the user & event data model. Customers send attributes as unstructured JSON documents that are stored in SingleStore for queries. Previously we had huge problems in both the write & read path due to the large variety of JSON schemas our customers can send – making schema inference via sampling impossible. With SingleStore's new JSON encoding engine, we achieved a massive improvement on data ingest performance. It was fantastic to collaborate on a feature with SingleStore's teams – they took the time to understand our use cases and validate their design." — Cameron Newman, Director of Platform Engineering, Heap

Heap allows app and web developers to instrument their applications easily, and provides analytics so they know exactly what users are doing on their site. As we dive into the investigation we conducted to solve Heap's Sparse JSON problem, let's first set the stage with some background information.

Background

SingleStore columnstore tables are broken into one-million-row chunks called segments. Within each segment, columns are stored independently — in contiguous parts of a file, or in a file by themselves. These stored column chunks are called column segments, and the best part is they are seekable. When we store JSON data, we do something special.

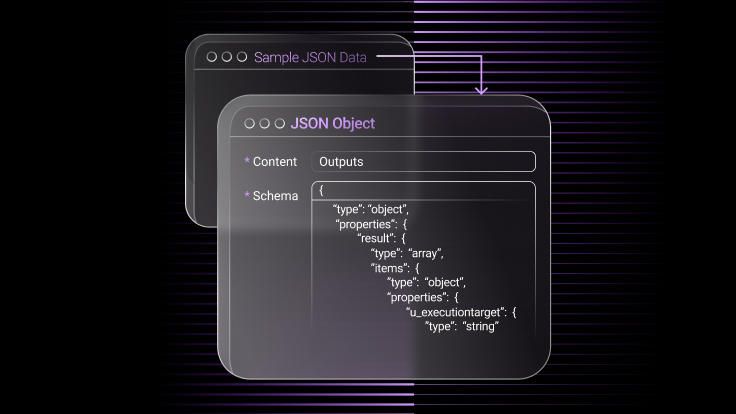

We infer a schema for the one million JSON documents we want to store, and columnarize it using a format inspired from Apache Parquet. The columnarization is done by inferring key paths and storing some additional data per key path to reconstruct the document from these key paths. The key paths are then stored in the same encodings where we store regular columns (as internal columns).

The benefit of this approach is that using SingleStore’s own encodings gives us fast seeks (or row level decoding), and using the columnarized JSON splitting approach gives us good compression, allowing us to only decode the keys we need.

This works great for typical JSON use cases where schemas are dense, i.e. most keys appear in most documents since the columnarizing approach leads to a densely populated internal column. But in cases of sparse JSON (i.e., each key comes in only a few documents) our approach ends up using a lot of memory and processing time.

Not to spoil the ending, but we solve the issues that come up in the investigation. The new encoding can now support a wider range of JSON data efficiently — including Heap’s use case, making them happy which makes us happy. Read on to see what the problems were and how we solved them.

The journey to great sparse JSON handling

Where did we leave off? Ah, we didn’t see the expected performance improvement for the encoding. To be specific, the new encoding offered row-level seeks so filtering on the JSON data should have been almost as fast as if the key path was a regular column. After some joint investigation, we figured out the data was stretching our schema inference safeguards (so as to not infer too many internal columns) and due to the schema inference fallback, we weren’t seeing the performance improvement we expected.

We made a patch loosening the schema inference safeguards; and when Heap tried some test data on the new build, they saw the expected performance improvements. Then they started heavier tests that ingested more data, resulting in pipeline failures and out-of-memory (OOM) errors everywhere.

Compression challenges

This was a new challenge, we could see through logs the compression of the JSON data was failing. Why was the new encoding taking so much more memory to compress for sparse cases? The answer to this is in the columnarizing of the JSON.

Using the following JSON data table:

1

{“a”: 1}2

3

{“b”: 1}4

5

{“c”: 1}6

7

{“d”: 1}8

9

{“e”: 1}

The JSON rows will be encoded as follows:

| a | b | c | d | e |

| 1 | NULL | NULL | NULL | NULL |

| NULL | 1 | NULL | NULL | NULL |

| NULL | NULL | 1 | NULL | NULL |

| NULL | NULL | NULL | 1 | NULL |

| NULL | NULL | NULL | NULL | 1 |

These extra NULLs were going to be a problem for both memory and execution time. What we're seeing here is a sparse-matrix-like problem, but with the added complexity of arbitrarily nested JSON. How can we avoid spending time and memory on all these NULLs?

This was solved using an algorithm inspired from run-length encoding. Run-length encoding is an encoding that stores runs of data (sequences in which the same data value occurs in many consecutive data elements) as a pair of value and count. The tricky aspect here was due to the nested structure of JSON. During columnarizing, for a missing key, different data is stored depending on which ancestors of the missing key were present in the document. We ended up using a cascading-trigger-based approach where state change at an ancestor key would trigger state change at a descendent key, flushing state to the internal column.

Further compression challenges

This fixed our compression issue, so are we done? Nope. We give a new build to Heap and Heap runs the same tests that they tried last time — and even though the ingestion pipeline made more progress, it ended up with OOM errors again. Scraping through the logs, we now see data scan causing OOM errors.

Now, why on earth would ingestion pipeline code be calling scan? To understand this we need some context on how data is ingested in SingleStore. Ingestion pipelines internally run LOAD DATA queries; at the end of a load data query we merge segments and rewrite them in sorted order. This sorting of segments at the end of load query requires reading of the segments.

By this point, we knew this problem was bigger than what we had estimated. To make sure we fixed it correctly — instead of kicking the can down the road — we decided it was time to buckle down, take out a yellow legal pad and write down all the places we had memory usage and address them one by one.

Memory allocation optimization

During scan, each internal column requires its own iterator. Since this code is shared with top-level columns, the iterator has its own allocators which end up pre-allocating memory. This isn’t an issue when there are a few top-level columns to iterate through, but when we have hundreds to thousands of internal columns, the pre-allocated memory adds up.

To fix this, we now share an allocator across the internal columns. This same problem exists in the streaming interface used by the internal columns to read from files, so we use the shared allocator here too to reduce the pre-allocation.

Read null materialization

Finally, we have a problem similar to our compression issue. While reading the data from the disk, each internal column decompresses all the NULLs as an intermediate state.. This was fixed by creating an intermediate interface which could store the intermediate state in run-length format to avoid materializing the NULLs.

Conclusion

We gave a private build to Heap, with prototypes of these fixes and the pipeline ingestion succeeded without OOMs. And here we have it folks, finally we have reached the end of all the fixes in this journey. There was more shooting down in the trenches — like the addition of an information schema table to see the schema inferred per segment, the addition of memory instrumentation to figure out memory issues quicker and reducing the number of segments being decoded at once during the post sort — but I won’t go into them for now.

Summary

If there is one takeaway from reading this, it’s that SingleStore has made strides to make using JSON much more user friendly and allowing wider kinds of JSON to be handled with the efficiency SingleStore is known for. If you generate JSON data in your application, whether it's conventional dense JSON or sparse JSON, you can be confident that SingleStore stores it and queries it efficiently and reliably, at whatever scale you need.

![ICYMI: Highlights From SingleStore [r]evolution 2022](https://images.contentstack.io/v3/assets/bltac01ee6daa3a1e14/bltc79945d23b29f430/6433d90735650a110764412c/featured_icymi-highlights-from-revolution-2022.png?height=187&disable=upscale&auto=webp)