For years, data teams have been chasing the same vision: A single system that powers real-time transactions, analytics and lakehouse storage without ETL jobs, laggy pipelines or duplicate infrastructure.

A clean, unified stack. Fewer moving parts. Instant insights.

But that future always feels one roadmap away.

So teams settle. They stitch together transactional databases, analytical warehouses and a cloud data lake, the centralized repository that holds large volumes of raw data, structured or not, from many different sources.

They build pipelines to sync everything. They layer on scripts, data governance tools and workarounds to hold it all together.

And over time, the cost of maintaining this patchwork grows — quietly, then all at once.

The hidden price of piecing things together

Running separate databases and systems for OLTP, OLAP and data lake storage might feel manageable — until it’s not:

Dashboards lag behind reality because batch jobs fail overnight. Operational reporting suffers when data is not up-to-date or unified.

Models train on stale data because the pipeline didn’t sync in time.

Security reviews stall because no one knows which copy is the “real” one.

A critical customer event gets lost in transfer before it can trigger a response.

Understanding customer behavior in real time is crucial for timely responses and improved customer satisfaction.

Every silo adds latency, complexity and overhead. And every workaround delays your team’s ability to act on what’s happening right now.

What if seamless integration made it all just work together?

Now imagine a different approach:

One platform that handles both transactions and analytics.

One copy of your data, immediately available to every workload.

One set of tools for security, storage, and data governance.

No ETL. No syncing. No lag.

Different data sources and workloads integrate seamlessly, so data flows across the platform without hand-built pipelines.

This kind of architecture isn’t theoretical. It’s real, and it’s running today.

Here’s what it looks like in practice:

A new record is instantly visible in both your operational apps and analytical dashboards.

Hot data is automatically buffered into cloud storage behind the scenes, no jobs to build or maintain.

A single AI database engine handles both millisecond lookups and large-scale scans, and its storage layer enforces schemas to keep data quality and reliability high.

Role-based access and audit logs apply everywhere, whether your data is in memory, on disk or in the cloud.

Everything works together natively and instantly, with no glue code.
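To make the first point concrete, here is a minimal sketch of a single store serving both an operational lookup and an analytical aggregate with no copy step in between. It uses Python's built-in `sqlite3` purely as a stand-in; it is not SingleStore and says nothing about scale or performance. The `orders` table and its columns are hypothetical.

```python
import sqlite3


def write_then_read():
    # One in-memory database stands in for "one copy of your data".
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [("ana", 20.0), ("ben", 35.5)],
    )

    # Transactional write: the context manager commits on success.
    with conn:
        cur = conn.execute(
            "INSERT INTO orders (customer, amount) VALUES (?, ?)", ("ana", 10.0)
        )
        new_id = cur.lastrowid

    # Operational read: point lookup of the record just written.
    row = conn.execute(
        "SELECT customer, amount FROM orders WHERE id = ?", (new_id,)
    ).fetchone()

    # Analytical read: an aggregate over the same table, with no sync job.
    totals = dict(
        conn.execute(
            "SELECT customer, SUM(amount) FROM orders GROUP BY customer"
        ).fetchall()
    )
    conn.close()
    return row, totals


if __name__ == "__main__":
    print(write_then_read())
```

The new order shows up in the aggregate immediately because both queries read the same table; there is no second system to fall out of date.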

Real time finally feels real

The era of waiting on data pipelines and nightly jobs is over. Your team can now act the instant something happens. With every event captured and immediately available, you gain the confidence to respond in the moment rather than playing catch-up.

When all your data lives in one place and one engine powers every workload:

Fraud detection kicks in as it happens, not hours later.

Personalization logic analyzes customer behavior in real time, so recommendations reflect a customer’s last click, not yesterday’s behavior.

Reports update instantly, without anyone waiting on a pipeline run. Analytic workloads, including SQL queries, run against live data, helping teams uncover insights and enforce data quality.

Machine learning teams and analysts work off the same fresh dataset, with no version mismatches, so users benefit from consistent, high-quality data for their analytic tasks.

Real-time stops being a buzzword and becomes your default.

Smarter, faster: AI and machine learning in real time

Artificial intelligence and machine learning aren’t just buzzwords. They’re the engines powering the next wave of real-time data management. By embedding AI and ML directly into your data platform, you unlock the ability to analyze vast amounts of data, including unstructured data, raw sensor data and semi-structured logs, as it streams in from multiple sources. This means you can make data-driven decisions in the moment, not after the fact.

Modern data lake architecture, AI databases and data warehouses are evolving into centralized repositories that do more than just store data. With AI and ML, these platforms become dynamic environments for big data processing and advanced analytics. Data scientists and business analysts can run complex queries, similarity searches and text mining on both historical data and live feeds, uncovering actionable insights that drive business value.

AI and ML algorithms play a crucial role in enforcing data quality. They automatically flag anomalies, validate schema and support data versioning, helping keep stored data accurate, consistent and ready for analysis. This is especially important when dealing with sensitive data or integrating information from IoT devices, mobile apps and other business applications. With robust access controls and transaction support, you can trust your data is secure and compliant, even as it flows through different storage layers.
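As a rough illustration of what schema validation and anomaly flagging can look like at ingest time, here is a small Python sketch. It is an assumption-laden toy, not how any particular platform implements these checks: the `EXPECTED_SCHEMA` fields are hypothetical, and a simple z-score test stands in for a trained ML model.

```python
from statistics import mean, stdev

# Hypothetical schema for an incoming sensor reading.
EXPECTED_SCHEMA = {"device_id": str, "temperature": float}


def validate_schema(record):
    """Return a list of schema problems; an empty list means the record passes."""
    errors = []
    for field, field_type in EXPECTED_SCHEMA.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], field_type):
            errors.append(f"wrong type for {field}")
    return errors


def is_anomaly(value, history, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from recent history.

    A z-score is a stand-in here for whatever model a real system would use.
    """
    if len(history) < 2:
        return False  # not enough data to judge
    sigma = stdev(history)
    if sigma == 0:
        return value != history[0]
    return abs(value - mean(history)) / sigma > threshold


if __name__ == "__main__":
    recent = [20.0, 20.5, 21.0, 20.2, 20.8]
    reading = {"device_id": "sensor-7", "temperature": 95.0}
    print(validate_schema(reading), is_anomaly(reading["temperature"], recent))
```

Running checks like these as data arrives, rather than in a later batch job, is what lets bad records be caught before they reach dashboards or models.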

The business value and wins add up

This isn’t just an architecture upgrade. It’s a shift in how teams operate:

Speed. Transactions and analytics happen in the same moment, on the same data.

Simplicity. Fewer systems to manage, fewer pipelines to maintain.

Savings. Lower storage and infrastructure costs, lower licensing fees and less operational drag.

Clarity. A single governance model gives you confidence in your compliance.

The platform also helps businesses stay ahead of market trends by providing timely insights.

You act faster, spend less and move with more trust in your data.

One platform. All your diverse workloads. Now.

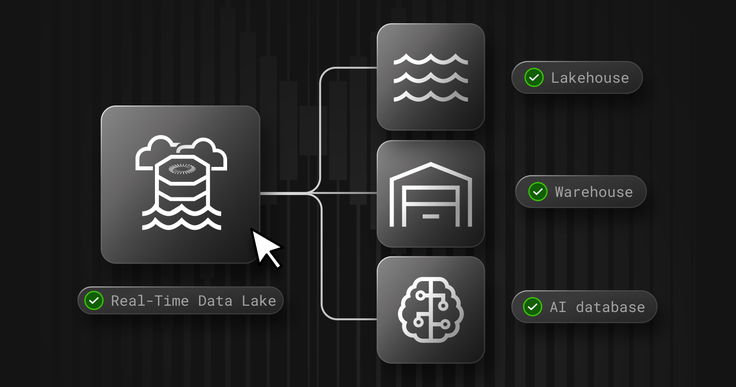

SingleStore isn’t just a unified transactional-analytical engine. It’s a full-blown AI database, too. OLTP, analytics and lakehouse use cases all run in a single platform. We support data lakehouses, data warehouses and AI databases, enabling diverse workloads and big data analytics across structured, semi-structured and unstructured data.

Inline ingestion makes every write immediately queryable, while smart buffering moves data seamlessly between memory and cloud storage. The system is designed to store a wide range of data types and data structures, so it stays flexible as workloads change.

One engine, one metadata layer and one security model span every workload. For example, organizations use SingleStore to power real-time analytics on eCommerce transactions, process images for AI-driven medical diagnostics and manage diverse analytic workloads across multiple data types. These examples highlight the platform’s flexibility for modern data management needs.

Stop duct-taping systems together. Start building on a platform that outperforms.

Frequently Asked Questions