It's a data-driven world, and anyone who is building or using applications expects lightning-fast, context-aware search experiences. That’s why Model Content Protocols (MCPs) and hybrid search arose. Whether users are hunting for a specific keyword like “dog food quality” or something more abstract like “Turkish delight,” behind the scenes, modern search systems need to deliver both precision and semantic depth, offering results that are not only accurate but also contextually relevant.

In creating search-based applications, developers have typically relied on Elasticsearch, built on Apache Lucene. And Elasticsearch performed well, at least until data sizes grew exceptionally large and until developers needed more than just full-text or vector search.In scenarios where hybrid needs blending with keyword search, vector similarity, and structured filters, the limitations of Elasticsearch begin to show.

In a comparison of Elasticsearch vs. SingleStore, we noted that the architecture of Elasticsearch isn't designed for advanced analytics, real-time hybrid queries, or unified operations across structured and unstructured data, leading to scalability challenges, increased operational overhead, and fragmented architectures.

In this blog, we’ll examine different scenarios on how SingleStore’s hybrid search capability reduces the limitations encountered and faced in ElasticSearch. We'll walk through a hands-on experience using a set of Amazon product reviews, share real code examples, and examine core queries, full-text, vector, and hybrid searches.

The tale of two architectures

The traditional search: Elasticsearch

As we mentioned, Elastic is a proven search engine built on top of Apache Lucene. It uses inverted indexing structure for text and keyword matches. Apart from these features, Elastic is also great at:

Flexibility: Each document stored as JSON; fields tokenized, analyzed. Flexible schema, but mapping complexity increases when introducing non-text fields.

Performance: To get good performance, many parts of Lucene's inverted indices and vector indices must be in memory or warm disk-caches. However, large datasets push cost in RAM/disk IO.

Scalability: Elasticsearch scales by horizontal sharding; replication ensures redundancy. But performance depends heavily on how shards, replicas, and node roles are configured.

However, even with all these features and characteristics, Elastic still suffers from performance degradations at various levels.

Elasticsearch’s strongest game is keyword match and full text search, however, it starts to break down when you add SQL style joins, range filters and analytics. It often needs denormalized schemas or external pipelines.

Adding vector support in Elasticsearch means defining dense_vector fields, setting dimensions, similarity metric, indexing options, then ensuring documents are updated properly. This can create mapping mismatches that often lead to silent failures.

When data grows large, maintaining shards and replicas requires careful provisioning. And that means scaling to support heavy vector + filter + text workloads is nontrivial.

Elasticsearch often needs document refresh or index commit to make newly inserted/updated data visible for search. Embedding updates in particular tend to suffer from lag or non-visibility until refresh.

SingleStore: The modern era of search

So how can developers counter such issues? Enter SingleStore. SingleStore reimagines search for the age of real-time data and AI by unifying text search, vector search, and structured SQL in a single database engine. SingleStore offers a high-performance architecture that simplifies development and scales seamlessly.

Here’s how SingleStore bridges the gaps left by traditional search engines:

SingleStore’s rowstore and column store capability lets you handle both fast point lookups and large analytics without the need for a separate system.

The vectors come with built in similarity functions, hence there is no need for separate mappings, dimensions setting or hidden pitfalls.

SingleStore extends standard SQL with full-text search (MATCH ... AGAINST) and vector functions. This allows you to run hybrid queries that combine text search, vector similarity, joins, filters, and aggregations in a single SQL statement – thus eliminating the need for separate pipelines or re-ranking steps.

With no need for multiple tools, your infrastructure footprint is reduced. The results are faster, efficient and lower cost of ownership.

In summary, if you want real-time search that handles both vector and text — plus filters, joins, and analytics — SingleStore delivers what Elastic often can only approximate. In experiments to test vector + filters + embedding visibility, every time Elasticsearch failed (or required fiddling) SingleStore handled it cleanly. That makes a big difference in developer time, reliability, and latency, especially when building production systems.

In the following sections, we’ll examine these concepts with a real-world data set and understand how the two search capabilities differ.

Prerequisites

In the example use case below, certain prerequisites will help you follow along:

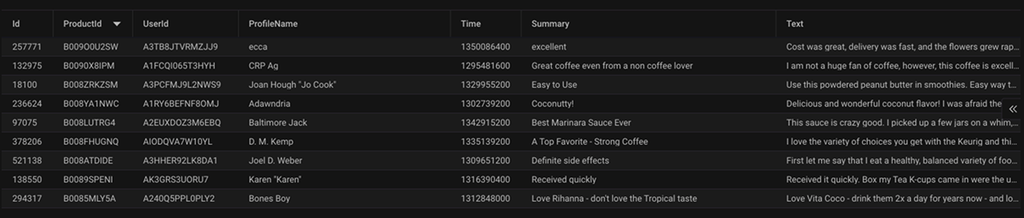

Amazon Product Reviews dataset from Kaggle. For this blog, we have used a smaller structure of the complete dataset for faster embeddings.

A SingleStore Helios account. You can create a free new workspace on Helios using the Helios signup page. Once set, you can load the dataset directly and then connect your Python application from Jupiter notebook directly on Helios. A single place for all your storage.

Once set up, you’ll have a table structure similar to this schema:

Now you’re ready to test the use case.

Search implementation

To test the complete use case, we’ll perform three different kinds of search operations around approximately 10K of data. For this blog, we have tested, full-text search, vector search and hybrid search.

Full-text search is a classic keyword or phrase search. This matches words in the query to words in documents using inverted indexes and BM25 scoring.

Vector search is a semantic search powered by embeddings. Instead of exact keywords, it finds reviews with similar meaning to the query.

Hybrid search combines both approaches, blending keyword precision with semantic recall.

SingleStore implementation

Full-Text search: finds reviews with exact or close word matches using MATCH … AGAINST.

1#%%2# ==========================================================3# 3. Full-Text Search4# ==========================================================5def full_text_search(query, topk=5):6 start = time.time()7 cur.execute("""8 SELECT Id, Summary, MATCH(Text) AGAINST (%s) AS score9 FROM amazon_reviews10 WHERE MATCH(Text) AGAINST (%s)11 ORDER BY score DESC12 LIMIT %s13 """, (query, query, topk))14 results = cur.fetchall()15 print(f"🔎 Full-text search in {time.time() - start:.4f} sec")16 return results17 18# Example queries19print(full_text_search("dog food"))20print(full_text_search("cough medicine"))

Vector search finds semantically similar reviews by computing dot product similarity between embeddings.

1# ==========================================================2# 4. Vector Search3# ==========================================================4def vector_search(query, topk=5):5 qvec = embed(query)6 vec_json = str(qvec).replace("'", '"') # JSON array string7 start = time.time()8 cur.execute("""9 SELECT Id, Summary,10 DOT_PRODUCT(embedding, %s) AS score11 FROM amazon_reviews12 WHERE embedding IS NOT NULL13 ORDER BY score DESC14 LIMIT %s15 """, (vec_json, topk))16 results = cur.fetchall()17 print(f"🔎 Vector search in {time.time() - start:.4f} sec")18 return results19 20# Example queries21print(vector_search("healthy pet food"))22print(vector_search("candy"))

Hybrid search blends text and vector scores in SQL, giving full control over weighting

1 def hybrid_search(query, topk=5, text_weight=0.5, vector_weight=0.5):2 qvec = embed(query)3 vec_json = str(qvec).replace("'", '"')4 start = time.time()5 cur.execute(f"""6 SELECT Id, Summary,7 COALESCE(MATCH(Text) AGAINST (%s), 0) AS text_score,8 COALESCE(DOT_PRODUCT(embedding, %s), 0) AS vector_score,9 (%s * COALESCE(MATCH(Text) AGAINST (%s), 0) +10 %s * COALESCE(DOT_PRODUCT(embedding, %s), 0)) AS hybrid_score11 FROM amazon_reviews12 WHERE embedding IS NOT NULL13 ORDER BY hybrid_score DESC14 LIMIT %s15 """, (query, vec_json, text_weight, query, vector_weight, vec_json, topk))16 results = cur.fetchall()17 print(f"⚡ Hybrid search (fast) in {time.time() - start:.4f} sec")18 return results19 20# Example21print(hybrid_search("dog food"))

Once these implementations are done, we can explore how performing search with similar keywords on SingleStore performs with better accuracy and efficiency.

Results and observations

After running the above code with the same dataset and search queries, we observed consistent and significant improvements in the results with SingleStore. The table below outlines the performance across multiple runs on the same dataset.

Category | Explanation | Execution time |

Full-text search | More precise results, simpler query. | Execution time in test: 0.38s. |

Vector search | Native vector column and built-in similarity. | Execution time in test: 0.37s. |

Hybrid search | Clear, tunable, supports normalization. | Execution time in test: 0.35s |

Observations

Unlike engines that may vary the results depending on refresh cycles or index updates, SingleStore returned consistent rankings across repeated queries.

Even when we scaled the dataset from ~10K to ~100K records, execution times remained under a second, showing linear scaling without complex tuning.

All three search types were expressed in straightforward SQL. No custom DSL, schema tweaks, or refresh calls were required.

By adjusting weights in SQL (e.g., 0.7*vector_score + 0.3*text_score), we can tune the balance between semantic and keyword relevance with full transparency.

Newly inserted rows were immediately searchable in both full-text and vector queries without needing manual refreshes or re-indexing.

Because text, vector, and hybrid search all run inside the same engine, there’s no need for multiple pipelines or extra services, reducing infra overhead.

These results demonstrate that while Elasticsearch is still a strong keyword search engine, SingleStore delivers a unified, real-time, and lower-latency platform for modern hybrid search.

A real-world example: Why SingleStore wins

A customer faced rapid growth where thousands of publications and customers started to add hundreds of titles. Their existing stack, with plans to add Elastic, wasn’t working well. They started to face issues like:

Poor search performance.

Infrastructure limitations

Scaling and unpredictable cost limits.

Why SingleStore was a better choice

With SingleStore, the above issues were easily addressed, because ingleStore unifies transactional and analytic workloads and supports search use cases without adding separate systems.

With SingleStore, the customer:

Experienced dramatic gains in speed and accuracy (up to 70x)

Was able to process millions of rows and sustain ~120K queries per minute for real-time workloads

Enjoyed up to 35% acceleration in analytics/dashboard performance

Lowered their cost and operational overhead.

The customer summed it up as follows: “SingleStore will seriously decrease our infrastructure complexity, allowing us to move faster and with more confidence. This all started with search, but now it's far bigger than that.”

This real-world story matches what we observed in our 10K experiment: SingleStore provided faster, more consistent full-text, vector and hybrid queries, and a simpler developer experience.

Where Elasticsearch required DSL gymnastics, mappings, refresh calls and script scoring, SingleStore enabled the customer to express hybrid search and normalization directly in one SQL query and get immediate, reproducible results.

When searching in your application is only one piece of a broader real-time data problem, SingleStore is a great solution.

Conclusion

By bringing vectors, full-text and SQL all together in one engine, SingleStore. makes hybrid queries simple, fast, and consistent. This helps in lowering engineering overheads and makes product behavior predictable.

If you’re looking for search that’s semantic, precise, and real-time and you’d rather express that logic in SQL than stitch together multiple services, SingleStore is worth a short proof-of-concept.

To learn more about SingleStore, visit our official documentation. You can also register for a SingleStore webinar and learn about the latest concepts and technologies through hands-on experience.

Frequently Asked Questions