Early conversational programs like ELIZA could only simulate basic dialogue. Large Language Models (LLMs) have come much further, fueled by ever-increasing computational power and massive datasets. They can now not only understand and generate human-like text, but also perform a wide range of tasks, from summarizing information to writing in a variety of creative formats. This progress paves the way for even more sophisticated models, such as those that incorporate multiple modalities.

Today, let’s learn about multimodal models, and how they are revolutionizing the world of gen AI.

What are multimodal models?

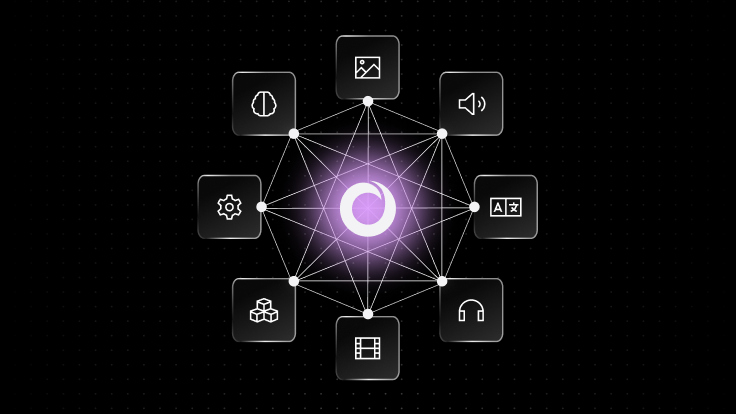

In the context of machine learning and artificial intelligence, multimodal models are systems designed to understand, interpret or generate information from multiple types of data inputs or "modalities." These modalities can include text, images, audio, video and sometimes even other sensory data like touch or smell.

The key characteristic of multimodal models is their ability to process and correlate information across these different types of data, enabling them to perform tasks that would be challenging or impossible for models limited to a single modality.
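One common way to picture "correlating information across modalities" is a shared embedding space, the idea behind models such as CLIP: text and images are each mapped to vectors, and related items land close together. The sketch below is purely illustrative. The vectors are hand-made toy values rather than the output of any real encoder, and `cosine_similarity` and `best_match` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(query, candidates):
    """Return the candidate name whose vector is most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))


# Toy vectors standing in for image-encoder outputs (assumption, not real data).
image_embeddings = {
    "dog.jpg": [0.9, 0.1, 0.0],
    "car.jpg": [0.1, 0.9, 0.1],
}

# Toy vector standing in for the text embedding of "a photo of a dog".
text_embedding = [0.8, 0.2, 0.1]

print(best_match(text_embedding, image_embeddings))
```

A real system would replace the toy vectors with embeddings produced by trained text and image encoders, but the matching step works the same way.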


Context plays a vital role when a user brings a query to an LLM. Traditional AI models are limited to a single data type, which makes it harder to supply enough context and often results in less accurate output. Multimodal models address this by considering data from multiple sources, including text, images, audio and video. This broader perspective allows for more context and self-learning, and the enhanced comprehension leads to improved accuracy, better decision-making and the ability to tackle tasks that were previously out of reach.

Examples of multimodal models

How do multimodal models work?

Multimodal models allow for more nuanced understanding and richer interaction capabilities than unimodal systems. By mimicking the human ability to combine information from several senses, multimodal models can achieve better retrieval and performance in tasks like language translation, content recommendation, autonomous navigation and healthcare diagnostics, making technology more intuitive, accessible and effective for a wide range of applications.

Let’s see how multimodal models work in this comprehensive tutorial, considering multimodal models from Gemini: gemini-pro and gemini-pro-vision.

You need a Notebook to understand and carry out this hands-on tutorial. Notebooks are interactive web-based tools that allow users to create and share documents containing live code, visualizations and narrative text.

Consider using the SingleStore Notebook feature here. If you haven't already, activate your free SingleStore trial to get started. Create a new Notebook and start with the tutorial.

First, install the Google AI Python SDK.

```python
!pip install -q -U google-generativeai
```

Define a function to_markdown that converts given text into Markdown format.

```python
import pathlib
import textwrap

import google.generativeai as genai

from IPython.display import display
from IPython.display import Markdown


def to_markdown(text):
    # Turn bullet characters into Markdown list markers.
    text = text.replace('•', ' *')
    # Prefix every line (including blank ones) with '> ' to render a block quote.
    return Markdown(textwrap.indent(text, '> ', predicate=lambda _: True))
```
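The `predicate=lambda _: True` argument is easy to overlook. By default, `textwrap.indent` skips lines that contain only whitespace, which can split a Markdown block quote at every paragraph gap. The short comparison below uses a made-up `sample` string and no notebook-specific imports, so it can be run anywhere.

```python
import textwrap

# Hypothetical sample text with a blank line between two paragraphs.
sample = "First point\n\nSecond point"

# Default behavior: the blank line is left without a '> ' prefix.
default_quoted = textwrap.indent(sample, "> ")

# With the predicate used in to_markdown: every line gets the prefix.
fully_quoted = textwrap.indent(sample, "> ", predicate=lambda _: True)

print(repr(default_quoted))
print(repr(fully_quoted))
```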

Set your Google API key.

```python
import os

# Paste your own Google API key between the quotes.
os.environ['GOOGLE_API_KEY'] = ""
genai.configure(api_key=os.environ['GOOGLE_API_KEY'])
```
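If you would rather not paste the key into a notebook cell, one option is to set `GOOGLE_API_KEY` outside the notebook and fail early when it is missing. The helper below is a minimal sketch of that idea. `load_api_key` is a hypothetical name, not part of the Google SDK, and the `env` parameter exists only to make the function easy to try with a plain dictionary.

```python
import os


def load_api_key(name="GOOGLE_API_KEY", env=os.environ):
    """Return the API key stored under `name`, or raise if it is missing or blank."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before configuring the SDK.")
    return key
```

In the notebook, this could be used as `genai.configure(api_key=load_api_key())`.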

List the models

```python
# Print only the models that support the generateContent method.
for m in genai.list_models():
    if 'generateContent' in m.supported_generation_methods:
        print(m.name)
```

Let’s use the gemini-pro model with a query to see the response.

```python
model = genai.GenerativeModel('gemini-pro')
response = model.generate_content("what are large language models?")
to_markdown(response.text)
```

Now, let’s download an image available on the internet. You can substitute the URL of any image you like.

```python
!curl -o image.jpg https://statusneo.com/wp-content/uploads/2023/02/MicrosoftTeams-image551ad57e01403f080a9df51975ac40b6efba82553c323a742b42b1c71c1e45f1.jpg
```

Let’s display that image.

```python
import PIL.Image

img = PIL.Image.open('image.jpg')
img  # A bare variable on the last line of a cell renders the image inline.
```

Let’s use the gemini-pro-vision model to describe what the image is all about.

```python
model = genai.GenerativeModel('gemini-pro-vision')
response = model.generate_content(img)

to_markdown(response.text)
```
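The call above sends only the image. `generate_content` also accepts a list of parts, so a text instruction can be sent together with the image to steer the answer. The wrapper below is a small sketch of that pattern. `describe_image` and its default prompt are hypothetical, and the function works with any object that exposes a `generate_content` method and returns a response with a `.text` attribute.

```python
def describe_image(model, image, prompt="Describe this image in one sentence."):
    """Send a text prompt and an image in one request and return the reply text."""
    # The prompt and the image are passed together as a list of parts.
    response = model.generate_content([prompt, image])
    return response.text
```

With the objects from this tutorial, a call might look like `describe_image(genai.GenerativeModel('gemini-pro-vision'), img, prompt="What objects are in this picture?")`.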

You should see the model return an accurate description of the image. You can find the complete Notebook code here.

You can also check out this on-demand webinar, "LangChain for multimodal apps: Chat with text/image data," where we showcase an advanced multimodal retrieval system using SingleStore, GPT-4V and LangChain with CLIP.

This article has traced the evolution of AI models, highlighted the capabilities of multimodal systems, and provided a practical tutorial on using these models through gemini-pro and gemini-pro-vision. Through this exploration, we see that multimodal models are not just an advancement in technology; they are changing how we interact with LLMs.