In an era where milliseconds matter, real-time AI applications like fraud detection, personalization, predictive maintenance and anomaly detection depend not just on model quality, but on how quickly data flows into your system. Speed of ingestion is the foundation — without it, your “real-time” AI becomes reactive at best and irrelevant at worst.

Data ingestion fundamentals

Data ingestion is the process of moving information from many different sources into a system where it can be used — whether that’s a data lake, data warehouse or another central store. It’s the first step in turning raw inputs into something meaningful, bringing together everything from IoT sensor readings to social media streams into one place.

A strong ingestion strategy breaks down silos, ensures all relevant data is available in a consistent format and sets the stage for advanced analytics, machine learning and AI. The goal isn’t just to collect data — it’s to make it immediately usable for decision-making and innovation.

How to build a real-time ingestion pipeline

Real-time ingestion isn’t just “streaming instead of batching.” It’s an architecture designed to move data from event to insight in milliseconds. Here’s how to set it up:

1. Choose the right ingestion method for your sources

Event-driven streaming.

Use Kafka and Flink, or Redpanda, for clickstream, IoT and transaction data that needs to be consumed continuously.

Change data capture (CDC).

For transactional databases, use tools like Striim or StreamSets to replicate inserts, updates and deletes in near real time, and plan for schema monitoring.

Webhooks and event buses.

For SaaS integrations, push data via webhooks or an internal event bus instead of polling APIs.
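To make the CDC option concrete, here is a minimal sketch of what a downstream consumer does with a stream of change events. The event shape (`op`, `key`, `row`) is an assumption made for illustration; real CDC tools each define their own format, and the in-memory dictionary stands in for whatever target store you replicate into.

```python
from typing import Any


def apply_change(replica: dict[int, dict[str, Any]], event: dict[str, Any]) -> None:
    """Apply one change event to an in-memory replica keyed by primary key.

    The event layout is hypothetical, chosen only for this example.
    """
    op, key = event["op"], event["key"]
    if op == "insert":
        replica[key] = dict(event["row"])
    elif op == "update":
        # Merge only the changed columns; create the row if it is missing.
        replica.setdefault(key, {}).update(event["row"])
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown CDC operation: {op!r}")


replica: dict[int, dict[str, Any]] = {}
changes = [
    {"op": "insert", "key": 1, "row": {"status": "pending", "amount": 120}},
    {"op": "update", "key": 1, "row": {"status": "settled"}},
    {"op": "insert", "key": 2, "row": {"status": "pending", "amount": 75}},
    {"op": "delete", "key": 2},
]
for change in changes:
    apply_change(replica, change)

print(replica)  # {1: {'status': 'settled', 'amount': 120}}
```

Applying events strictly in source order is what keeps the replica consistent, which is one reason ordering guarantees matter when you pick a transport for CDC.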

2. Persist first, process in-flight

Land raw events as quickly as possible in a system that can store and query immediately.

Apply transformations and enrichments in-flight with Flink — or Spark Structured Streaming — rather than deferring to nightly ETL.
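The ordering in this step can be sketched in a few lines: write the raw event first, then enrich it on the way through. This is an illustration under stated assumptions, not a Flink or Spark job. The `raw_log` list stands in for a durable store, and the `region_by_ip` lookup and the event fields are hypothetical.

```python
import json
from typing import Iterable, Iterator


def persist_then_enrich(
    events: Iterable[dict],
    raw_log: list[str],
    region_by_ip: dict[str, str],
) -> Iterator[dict]:
    """Land each raw event before enriching it in-flight."""
    for event in events:
        # 1. Persist the untouched event so it can be replayed or re-enriched later.
        raw_log.append(json.dumps(event))
        # 2. Enrich in-flight instead of waiting for a nightly job.
        yield {**event, "region": region_by_ip.get(event["ip"], "unknown")}


events = [
    {"user": "a", "ip": "10.0.0.1"},
    {"user": "b", "ip": "10.9.9.9"},
]
raw_log: list[str] = []
enriched = list(persist_then_enrich(events, raw_log, {"10.0.0.1": "eu-west"}))

print(enriched[0]["region"], enriched[1]["region"])  # eu-west unknown
```

Because the raw copy is written before any transformation runs, a bug in the enrichment logic never costs you the original event.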

3. Eliminate unnecessary hops

Use a single platform that handles ingestion, storage and queries instead of chaining queues, warehouses and analytics engines.

Reduce copies of the same dataset — the more duplication, the higher the latency and maintenance burden.

4. Make it fault-tolerant from day one

Retain data in replayable queues so you can reprocess after outages.

Implement automated monitoring to catch stalled pipelines within seconds.

Keep ingestion stateless where possible to simplify recovery.
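As a rough sketch of how replayable queues and stateless consumers work together, the example below keeps the only piece of state, the committed offset, outside the consumer. `ReplayableLog` is a toy in-memory class written for this illustration, not the API of Kafka or any other broker.

```python
from typing import Callable


class ReplayableLog:
    """Toy append-only log; a stand-in for a retained, replayable queue."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> int:
        self._events.append(event)
        return len(self._events) - 1

    def read_from(self, offset: int):
        # Yield (offset, event) pairs starting at the requested offset.
        return enumerate(self._events[offset:], start=offset)


def consume(log: ReplayableLog, checkpoint: dict, handle: Callable[[dict], None]) -> None:
    """Process events after the checkpoint, committing only after success."""
    for offset, event in log.read_from(checkpoint["offset"]):
        handle(event)
        checkpoint["offset"] = offset + 1


log = ReplayableLog()
for i in range(5):
    log.append({"id": i})

processed: list[int] = []
state = {"failed_once": False}


def flaky_handler(event: dict) -> None:
    # Simulate a single outage while handling event 3.
    if event["id"] == 3 and not state["failed_once"]:
        state["failed_once"] = True
        raise RuntimeError("simulated outage")
    processed.append(event["id"])


checkpoint = {"offset": 0}
try:
    consume(log, checkpoint, flaky_handler)
except RuntimeError:
    pass  # the consumer "crashed"; the checkpoint still points at offset 3

consume(log, checkpoint, flaky_handler)  # a fresh run resumes from the checkpoint
print(processed, checkpoint)  # [0, 1, 2, 3, 4] {'offset': 5}
```

Committing after the handler succeeds gives at-least-once delivery: an event that fails mid-flight is replayed, so handlers should be safe to run twice on the same input.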

Gotchas that break real-time ingestion

Many teams discover too late that their infrastructure isn’t built for real-time responsiveness. Even if the AI models are fast and accurate, slow ingestion delays predictions. Here’s where things commonly go wrong:

Event streams aren’t kept up-to-date.

Whether it’s clickstream data, sensor output, or financial transactions, if data arrives in bulk instead of continuously, you’re already behind.

Change data capture (CDC) pipelines are fragile.

CDC can be a useful way to replicate database changes in near real time, but the pipelines often break when schemas change, source systems lag or network disruptions occur.

Traditional pipelines introduce latency.

ETL jobs, data lakes and downstream batch processes create unavoidable time gaps between data collection and AI inference.

Schema drift in CDC pipelines.

A renamed column or altered datatype can silently stop replication. Automate schema change alerts and apply version control to your data contracts.

Back-pressure buildup.

If downstream consumers fall behind, queues grow and latency spikes. Track consumer lag and scale consumers automatically under load.

Checkpointing failures.

In streaming ETL frameworks, missing or corrupt checkpoints can cause double-processing or data loss after restarts. Verify checkpoints regularly and store them in a durable system.

Clock drift between systems.

Misaligned timestamps can cause events to be processed out of order, breaking time-series analytics and model accuracy. Use NTP or similar services to keep clocks in sync.

Security blind spots.

Internal event streams often skip encryption and access control. Treat streaming data with the same security posture as stored data to avoid compliance gaps.
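Of the gotchas above, schema drift is one of the easiest to check for automatically. The sketch below compares an observed source schema against a versioned data contract and reports the differences. The column names and type strings are made up for the example, and in practice the observed schema would come from your database catalog or CDC tool rather than a literal dictionary.

```python
def detect_schema_drift(contract: dict[str, str], observed: dict[str, str]) -> list[str]:
    """Return human-readable differences between a contract and an observed schema."""
    problems: list[str] = []
    for column, expected_type in contract.items():
        if column not in observed:
            problems.append(f"missing column: {column}")
        elif observed[column] != expected_type:
            problems.append(
                f"type changed: {column} {expected_type} -> {observed[column]}"
            )
    # Columns the contract does not know about (sorted for stable output).
    for column in sorted(observed.keys() - contract.keys()):
        problems.append(f"unexpected column: {column}")
    return problems


contract = {"id": "bigint", "amount": "decimal", "created_at": "timestamp"}
observed = {"id": "bigint", "amount": "varchar", "created_ts": "timestamp"}

for problem in detect_schema_drift(contract, observed):
    print(problem)
# type changed: amount decimal -> varchar
# missing column: created_at
# unexpected column: created_ts
```

Note that a renamed column shows up as one missing column plus one unexpected column, which is exactly the pattern worth alerting on before replication stalls.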

Real-time AI isn’t just about “fast models” — it’s about ensuring the right data gets to the right place instantly.

Real-time AI needs real-time data ingestion

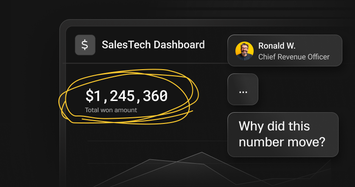

Consider fraud detection in fintech. Every millisecond counts when determining whether to block a transaction. If your ingestion system lags even a few tenths of a second, the fraudulent payment can go through before the model ever scores it. Or take predictive maintenance in manufacturing. If your ingestion pipeline processes sensor anomalies after the fact, you’re not preventing breakdowns, you’re reporting them. Both scenarios depend on processing streaming data as it arrives, so that detection and response are immediate.

In both cases, you need streaming ingestion, low-latency writes and concurrent reads and writes to power decisions the moment data arrives.

The ideal ingestion system must:

Accept high-velocity data from multiple sources

Capture and process data points as they arrive to support immediate analytics

Process and persist it immediately

Make it queryable without waiting for batch jobs
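The last requirement, queryable without waiting for batch jobs, is easy to picture with a small example: each event is written and then read back by the very next query. SQLite is used here only as a convenient single-node stand-in so the snippet runs anywhere; it says nothing about how a distributed real-time database behaves under load. The table and field names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, card TEXT, amount REAL)"
)


def ingest(event: dict) -> None:
    # Commit each event as it arrives rather than buffering for a batch load.
    with conn:
        conn.execute(
            "INSERT INTO transactions (card, amount) VALUES (?, ?)",
            (event["card"], event["amount"]),
        )


def card_total(card: str) -> float:
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM transactions WHERE card = ?",
        (card,),
    ).fetchone()
    return row[0]


totals = []
stream = [
    {"card": "c1", "amount": 40.0},
    {"card": "c1", "amount": 60.0},
    {"card": "c2", "amount": 5.0},
]
for event in stream:
    ingest(event)
    # The running total already reflects the event that was just written.
    totals.append(card_total(event["card"]))

print(totals)  # [40.0, 100.0, 5.0]
```

A fraud rule or model feature computed this way always sees the transaction it is scoring, which is the property batch-loaded stores cannot offer.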

Why incremental fixes don’t deliver real-time AI

Some teams try patching the problem with additional layers: Kafka for streaming, Redis for fast reads and a warehouse for analysis. But this often creates more fragmentation, not less. Now you’re syncing systems, managing connectors and increasing failure points. Data ingestion and integration tools are often brought in to manage that complexity, but they demand significant development expertise, and data engineers still have to design, build and maintain the resulting pipelines.

The truth is: real-time AI needs a unified ingestion and query engine — something that handles streaming input, fast storage and immediate analytics in the same place.

Building a foundation for speed and scale with unstructured data

To get ahead of these problems, design your architecture around the following principles. A robust data pipeline moves transformed data into the target system, such as a data lake or data warehouse, so that it is processed and delivered efficiently for analytics and reporting.

Ingest once, serve many.

Stream data into a system that can handle both operational and analytical workloads simultaneously.

Eliminate glue code.

Use a platform that minimizes data hops and avoids the need to ETL between services.

Choose a real-time database for concurrent writes and queries.

Look for a system that can write and query data concurrently at high speeds and scale with your data as it grows.

A well-designed data lake architecture stores data in a variety of formats, including non-relational data. That lets organizations keep data in its native form for big data processing and operational reporting, and draw on historical data for comprehensive analytics.

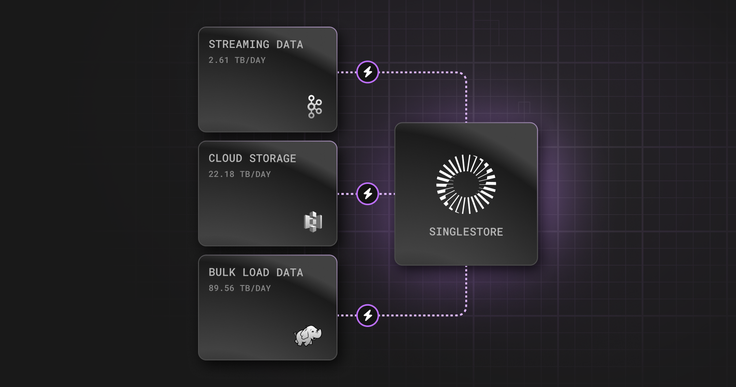

A platform built for real-time ingestion

This is where SingleStore shines. It combines streaming ingestion, in-memory processing and distributed SQL in a single engine. That means data arrives, is queryable instantly and powers AI models in real time — without lag, ETL or operational drag. You can see this in action with our example SingleStore notebook that shows how to ingest data from Kafka directly into SingleStore for instant querying.

Whether you're blocking fraudulent charges, tailoring in-app experiences, or catching anomalies before they become outages, real-time AI starts with real-time ingestion. SingleStore is built for that.

