In this blog, we show how you can create an external function in SingleStoreDB that calls out to OpenAI to get a vector embedding for a text string. You can use this function to get vectors directly in SQL to easily update a vector column with a vector embedding in a single SQL UPDATE statement. This style of programming may be more convenient than doing all interaction with your vector embedding API (whether OpenAI or some other API) through your application software.

We've had a number of customers and prospects ask how to get vector embeddings from SQL so they could update existing tables with a vector column containing an embedding for an existing text column. Of course, you can always query the data out of SingleStoreDB, get the vector, then write it back. But it can be more convenient to do it all from SQL.

Fortunately, SingleStoreDB supports external functions. Using this facility, you can write an external function that takes a text argument, returning an embedding for that text.

Background on External Functions

External functions are scalar- or table-valued functions which are implemented in an external process outside the SingleStoreDB service process. SingleStoreDB calls out to this service process to get results. Rows are sent, and results are returned in batches to minimize the cross-process call overhead. External functions are available in both SingleStoreDB Self-Managed and Singlestore Helios deployments. If you want to use external functions for Singlestore Helios, open a support ticket and the support team can enable it for you.

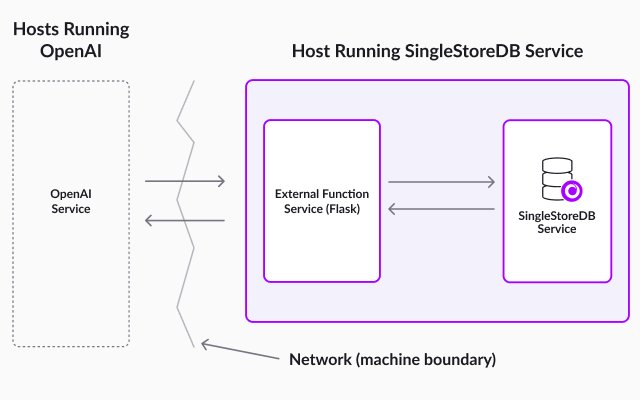

The illustration in Figure 1 shows how SingleStoreDB, an external function service process and OpenAI interact in the example we give later in this blog. In our example, we use a Flask-based web application hosting external functions, and SingleStoreDB is running self-hosted.

If you want to create a similar configuration in Singlestore Helios, your external function service process(es) will need to run on another host, not one of the SingleStoreDB servers. The illustration is simplified because it doesn't show the distributed nature of SingleStoreDB — but rather shows one SingleStoreDB host. For a self-hosted SingleStoreDB system running on more than one host, an external function process would be required on each host to run using "localhost" the way our example does.

A get_openai_embedding External Function

In Appendix 1, there's a complete Python script that implements an HTTP Server that implements get_openai_embedding. Start that server as described in the appendix. Then, at your SingleStore prompt, run:

create database db;

use db;

set global enable_external_functions = on;

CREATE EXTERNAL FUNCTION get_openai_embedding(string text) RETURNS text

AS REMOTE SERVICE "http://localhost:5000/functions/get_openai_embedding"

FORMAT JSON;

For example:

SELECT get_openai_embedding("blueberry");returns a string containing a JSON array of numbers of length 1536. Here's an abridged version of the result:

[-0.006673640571534634, -0.02640238218009472, …, -0.01984163001179695]

Using get_openai_embedding

Now, you can use this function like so:

create table words(word text, vector blob);

insert words(word) values

("breakfast"),("lunch"),("dinner"),("ballgame");

select * from words;

+-----------+--------+

| word | vector |

+-----------+--------+

| dinner | NULL |

| breakfast | NULL |

| lunch | NULL |

| ballgame | NULL |

+-----------+--------+

Next, update the vector field of every row in a single SQL statement:

update words set vector=json_array_pack(get_openai_embedding(word));Imagine you have a set of real data in a database and don't want to have to round-trip it through an application to get embeddings for text fields in your database. This approach lets you do that, potentially simplifying your programming task. The json_array_pack function is required to convert the JSON array of numbers (in string format), returned by the get_openai_embedding function into a packed binary representation. This is the representation that our vector similarity functions (e.g., dot_product) need as input.

An interesting experiment to check these vectors is to run this SQL statement to get the dot product of every vector with every other vector, ranking by the resulting cosine similarity score:

select w1.word, w2.word,

dot_product(w1.vector, w2.vector) :> float as score

from words w1, words w2

where w1.word <= w2.word

order by score desc;

+-----------+-----------+----------+

| word | word | score |

+-----------+-----------+----------+

| ballgame | ballgame | 1 |

| lunch | lunch | 1 |

| breakfast | breakfast | 1 |

| dinner | dinner | 1 |

| dinner | lunch | 0.937369 |

| breakfast | lunch | 0.917673 |

| breakfast | dinner | 0.907877 |

| ballgame | dinner | 0.850541 |

| ballgame | lunch | 0.842423 |

| ballgame | breakfast | 0.816232 |

+-----------+-----------+----------+As expected, each word is a perfect match with itself. Not surprisingly, "breakfast", "lunch" and "dinner" are close matches with each other, and "ballgame" is less close to any of them because it's not a meal. And intuitively, "dinner" is closer to "lunch" than "breakfast". This shows the fascinating power of the OpenAI LLM to encode the meaning of words and larger chunks of text into vector embeddings. For a deeper treatment of how to do semantic search with OpenAI embeddings in SingleStoreDB, see our blog on that topic [Aur23].

Another interesting thing about this example is that it shows the power of SQL applied to vector data. This query uses a "cross join" — a cross product of two tables, and applies dot_product in an expression that's a function of rows from two tables. This will require significantly more lines of code with specialty vector database systems (SVDBs) [Han23] that don't support SQL.

Making This Work in Singlestore Helios

To create the ability to have an external function service in Singlestore Helios (as mentioned previously), you'll have to open a support ticket to request external functions to be enabled, and potentially open network ports depending on where your external function service resides. Currently, it's not possible to set enable_external_functions yourself in the cloud service.

You'll need to run the external function code, preferably in the same cloud availability zone as your SingleStoreDB workspace. This can be done in a VM you control or in a lambda function service, depending on your needs. The http://localhost:5000/functions path used in Appendix 1 will have to be changed to a path that does not use localhost.

Conclusion

The example we've given here shows how you can get vector embeddings for text data in your database using an external function. This simplifies programming, compared to getting embeddings using an application program and updating the database.

To make the external function service ready for production, you'll want to use a production-ready web server rather than the one used in Appendix 1. For example, consider an ASGI web server like Uvicorn.

The example in Appendix 1 uses batching to communicate with OpenAI. When running a SELECT, a batch of rows from SingleStoreDB is sent to the external function process, and that batch is in turn sent to OpenAI. So only one round trip to OpenAI is needed for each batch.

The default batch size is 500 rows — see the documentation on external functions for more on batch processing. However, a standard UPDATE statement doesn't use batch processing. You can break work down into multiple steps, using one SELECT to a temp table, and then UPDATE using the temp table, to reduce round trips while updating many rows. Details of this approach are given in Appendix 2.

And, the OpenAI service is remote. You can make calls to the get_openai_embedding function faster by (in addition to batching) running your application on a cloud platform close to OpenAI servers. The OpenAI embeddings APIs are throttled, which will limit throughput.

For very fast interactive response time, it may make sense to use another embedding provider besides OpenAI, such as LLaMA, which you can host entirely local to your SingleStoreDB service processes. Then, there will only be one cross-process call to process an external function (instead of two), and the data will not have to traverse even the local network — let alone the internet.

How will you use external functions, vector data [WVD] or both in your SingleStoreDB applications?

References

- [Aur23] P. Aurora, E. Hanson, C. Malassa, AI-Powered Semantic Search in SingleStoreDB, April, 2023.

- [Han23] E. Hanson, A. Comet, Why Your Vector Database Should Not be a Vector Database, April, 2023.

- [WVD] Working with Vector Data, SingleStoreDB Documentation.

Appendix 1: HTTP Server Implementing get_openai_embedding

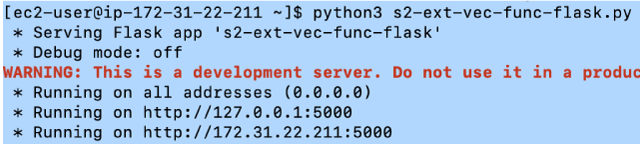

Below the line at the end of this appendix is python code to implement an HTTP server that can serve requests from SingleStoreDB for calls to the get_openai_embedding UDF. Place this code in a file s2-ext-vec-func-flask.py and make it executable with chmod 755 s2-ext-vec-func-flask.py.

To run the examples in this blog as well as our semantic search blog, first use pip3 to install these packages:

pip3 install mysql.connector openai matplotlib plotly pandas scipy

pip3 install scikit-learn requests

pip3 install FlaskThen, run s2-ext-vec-func-flask.py on the same machine as your SingleStoreDB node(s) at the command line as follows:

./s2-ext-vec-func-flask.py

============

#!/usr/bin/env python3

"""SingleStoreDB External Function Demo."""

import os

import json

import openai

from flask import Flask, request

from openai.embeddings_utils import get_embeddings

# You'll need an OpenAI API key to run this example. You can

# get one at https://platform.openai.com/account/api-keys

# For small data sets, the cost is nominal, but be aware that the

# cost can become significant for larger data sets.

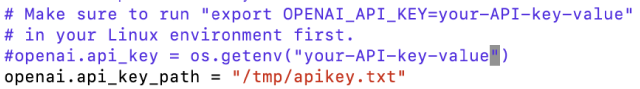

# Get the OpenAI API Key.

# Make sure to run "export OPENAI_API_KEY=your-API-key-value"

# in your Linux environment first.

openai.api_key = os.getenv("OPENAI_API_KEY")

# Instantiate a Flask application

app = Flask(__name__)

@app.route('/functions/get_openai_embedding', methods=['POST'])

def get_openai_embedding():

"""

Run this at the SingleStoreDB SQL prompt to create the function:

CREATE EXTERNAL FUNCTION get_openai_embedding(string text) RETURNS text

AS REMOTE SERVICE

"http://localhost:5000/functions/get_openai_embedding"

FORMAT JSON;

"""

# Batching used -- makes one round-trip to OpenAI per batch of rows.

row_ids, args = [], []

for row_id, data in request.json['data']:

row_ids.append(row_id)

args.append(data)

res = map(json.dumps, get_embeddings(args, "text-embedding-ada-002"))

return dict(data=list(zip(row_ids, res)))

if __name__ =='__main__':

#

# Run a test server.

#

# Note that this is *not* a production-quality server. For more

information

# about deploying in production, see the following URL:

#

# https://flask.palletsprojects.com/en/2.3.x/deploying/

#

app.run()Appendix 2: Getting Batched Update Using a Scratch Table

The UPDATE statement doesn't benefit from batching with external functions, but SELECT does. You can break the problem down into steps to get the benefit of batching while doing an update. For example, instead of:

update words set vector=json_array_pack(get_openai_embedding(word));you can do:

create temporary table scratch as

select word, json_array_pack(get_openai_embedding(word)) as vector

from words;And then do:

update words join scratch on words.word = scratch.word

set vector = scratch.vector;You can run this much faster if the words table is large.

Appendix 3: Additional Considerations for Singlestore Helios

To make the examples in this blog work on Singlestore Helios, you can also do the following:

- Run the Flask app on a public IP so that it can be accessed from other machines (your cloud), you need to bind it to 0.0.0.0 or the specific public IP of the machine where it's running. For this, modify the below section of Python script `s2-ext-vec-func-flask.py` as follows:

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000)- Note: Before doing this, make sure you understand the security implications. Binding to 0.0.0.0 will allow connections from any IP address, so you should ensure you have appropriate firewall rules in place and potentially also use HTTPS to encrypt the traffic. Never expose a debug server to the public internet.

- Ensure whitelisting your cloud host on the firewall of the server where your external function service resides, or disable the firewall (sudo systemctl stop firewalld).

- If required switch SELinux to Permissive mode (setenforce 0). After debugging, don’t forget to set it in enforcement mode.

- After all the changes run the python script and you will see the output below running Flask on all address 0.0.0.0

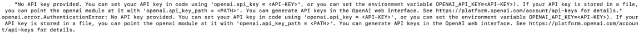

- Optional: If you face these cloud and server side errors related to your Openai key then use the below method to fix it

Method to fix:

create a .txt file (ex: /tmp/apikey.txt) and put openai key in that file

Make the following changes in Python script, save it and run the script