This webinar concludes our three-part series on cloud data migration. In this session, Domenic Ravita breaks down the steps of actually doing the migration, including the key things you have to do to prepare and guard against problems. Domenic then demonstrates part of the data migration process, using SingleStore tools to move data into Singlestore Helios.

About This Webinar Series

This is the third part of a three-part series. First, we had a session on migration strategies: the broad-brush business considerations to think about, beyond just the technical lift-and-shift strategy or data migration. That covered the business decisions and business strategy that guide you in picking which workloads you will migrate, as well as the different kinds of new application architectures you might take advantage of. And then, last week, we got down to the next layer and talked about ensuring a successful migration to the cloud of apps and databases in general.

In today’s webinar we’ll talk about more of the actual migration process itself. We’ll go into a little bit of more detail in terms of what to consider with the data definition language, queries, DML, that sort of thing. And then I’ll cover one aspect of that, which is the change data capture (CDC) or replication from Oracle to SingleStore, and show you what that looks like.

Database Migration Best Practices

I’ll talk about the process itself here in terms of what to look at, the basic steps that you are going to be performing, what are the key considerations in each of those steps. Then we’ll get into more specifics of what a migration to Singlestore Helios looks like and then I’ll give some customer story examples to wrap up, and we’ll follow this with a Q and A.

So the process that we covered in the last session has mainly these key steps, that we’re going to set the goals based on that business strategy in terms of timelines, which applications and databases are going to move, considering what types of deployment you’re going to have, and what’s the target environment.

We do all this because it’s not just the database that’s being moved, it’s the whole ecosystem around the database. So connections for integration processes, the ETL processes from your operational database to your data warehouse or analytic stores. As well as where you’ve got multiple applications sharing a database, understanding what that is and what the new environment is going to look like.

Whether that’s going to be a similar application use of the database or if you’re going to redefine or refactor or modernize that application, perhaps splitting a monolith application to microservices for instance. Then that’s going to have an effect on what your data management design is going to be.

So just drilling into step three there, for migration, that’s the part we’ll cover in today’s session.

Within that, you’re going to look at specific assessment of the workloads and you’re going to look, what sorts of datasets to return, what tables are hit, what’s the frequency, the concurrency of these? This will help in capacity sizing, it’ll also help in understanding which functions of the source database are being used in terms of features and capabilities, such as stored procedures.

And once you do that assessment, and there are automated tools to do this, you’ll look at planning that schema migration of step two. And the schema migration involves the table definitions but also all sorts of other objects in the database that you’ll be prepared to adapt. Some will be one-to-one – it depends on your type of migration – and then the initial load, and then continuing to that replication with a CDC process.

So, let’s take the first one here in terms of the assessment of the workloads, what you want to consider here. And when you think about best practices for this, you want to think about how are applications using the database? Are they using it in a shared manner? And then specifically, what are they using from the database? So for instance, you may need to determine what SQL queries, for instance, are executed by which application so that you can do the sequencing of the migration appropriately.

So finding that dependency, first of which applications use the database, and then more fine-grained, what are the objects, use of stored procedures, etc., and tables. And then finally, what are the specific queries?

So one strategy or tactic, I would say, that’s helpful in understanding that use by the application is to find a way to intercept the SQL queries that are being executed by those applications. So, if this is a Java application, you can wrap the connection object when the Java connection object is being created, and also the object for the dynamic SQL. Then you can use this kind of wrapper to collect metrics and information and capture the query itself, so that you have specific data on how applications use the database: which tables, which queries, how often they’re fired.

And you could do this in other languages as long as you wrap that client library, whether it’s ODBC, JDBC, et cetera. This technique helps to build a data set as you assess the workload, to get really precise information about which queries are executed and against which objects.
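As a rough illustration of that wrapping idea in another language, here is a minimal Python sketch. It assumes a DB-API style client library and uses the standard-library `sqlite3` module purely as a stand-in for the real source database; the class names `RecordingConnection`, `RecordingCursor`, and `QueryStats` are hypothetical and not part of any SingleStore or Oracle tooling.

```python
import sqlite3
import time
from collections import Counter, defaultdict


class QueryStats:
    """Holds how often each statement ran and how long it took in total."""

    def __init__(self):
        self.counts = Counter()
        self.seconds = defaultdict(float)


class RecordingCursor:
    """Wraps a DB-API cursor and records every statement it executes."""

    def __init__(self, cursor, stats):
        self._cursor = cursor
        self._stats = stats

    def execute(self, sql, params=()):
        started = time.perf_counter()
        try:
            return self._cursor.execute(sql, params)
        finally:
            # Record the statement text even when execution fails.
            self._stats.counts[sql] += 1
            self._stats.seconds[sql] += time.perf_counter() - started

    def __getattr__(self, name):
        # Delegate fetchone(), fetchall(), etc. to the real cursor.
        return getattr(self._cursor, name)


class RecordingConnection:
    """Wraps a DB-API connection so every cursor it hands out is recorded."""

    def __init__(self, connection, stats):
        self._connection = connection
        self._stats = stats

    def cursor(self):
        return RecordingCursor(self._connection.cursor(), self._stats)

    def __getattr__(self, name):
        return getattr(self._connection, name)


# Stand-in database; in a real assessment this would be the source connection.
stats = QueryStats()
conn = RecordingConnection(sqlite3.connect(":memory:"), stats)
cur = conn.cursor()
cur.execute("CREATE TABLE bills (id INTEGER PRIMARY KEY, amount REAL)")
cur.execute("INSERT INTO bills (amount) VALUES (?)", (12.5,))
cur.execute("INSERT INTO bills (amount) VALUES (?)", (40.0,))
cur.execute("SELECT COUNT(*) FROM bills")
row_count = cur.fetchone()[0]

for sql, count in stats.counts.most_common():
    print(count, f"{stats.seconds[sql]:.6f}s", sql)
```

Because the statement text is recorded with its placeholders rather than its bound values, repeated executions of the same parameterized query collapse into one entry, which is usually what you want for a frequency count.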

And then secondly, when you have that data, you’ll find that the next thing that you want to do is to look at the table dependencies. So again, if this is an operational database that you’re migrating, then it’s typical that you might have an ETL process that keys off of one-to-many tables to replicate that data into a historical store, a data warehouse, a data mart, etc. And so, understanding what those export and/or ETL processes are, on which tables they depend, is fairly key here.
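One way to get from captured queries to table dependencies is sketched below in Python. It is deliberately naive: the regular expression only catches simple `FROM`, `JOIN`, `INTO`, and `UPDATE` references and would miss subqueries, quoted identifiers, and schema-qualified names, so a real assessment would want a proper SQL parser. The consumer names and queries are made-up sample data.

```python
import re
from collections import defaultdict

# Naive pattern: a keyword followed by a bare table identifier.
TABLE_REF = re.compile(
    r"\b(?:FROM|JOIN|INTO|UPDATE)\s+([A-Za-z_][A-Za-z0-9_]*)",
    re.IGNORECASE,
)


def tables_in(sql):
    """Return the set of table names referenced by one SQL statement."""
    return {name.lower() for name in TABLE_REF.findall(sql)}


def dependents_by_table(captured):
    """Map each table to the applications or ETL jobs that touch it."""
    deps = defaultdict(set)
    for consumer, sql in captured:
        for table in tables_in(sql):
            deps[table].add(consumer)
    return deps


# Hypothetical captured workload: (consumer, statement) pairs.
captured = [
    ("billing_app", "INSERT INTO bills (id, amount) VALUES (1, 10)"),
    ("billing_app", "SELECT * FROM payments p JOIN bills b ON b.id = p.bill_id"),
    ("nightly_etl", "SELECT * FROM bills WHERE created_at > '2020-01-01'"),
]
deps = dependents_by_table(captured)

for table in sorted(deps):
    print(table, "->", sorted(deps[table]))
```

A table that shows up under both an application and an ETL job, like `bills` here, is one where the cutover has to be sequenced so that the downstream pipeline moves with it.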

These are just two examples of the kinds of things that you want to look at for the workload. And of course with the first one, once you have the queries, and you can see what tables are involved, you can get runtime performance on that, you can have a baseline for what you want to see in the target system, once the application and the database and the supporting system pipelines have been migrated.

So now let’s talk a little bit about schema migration. And there’s a lot involved in schema migration because we’re talking about all the different objects in the database, the tables, integrity constraints, indexes, et cetera. But we could sort of group this into a couple of broad areas, the first being the data definition language (DDL) and getting a mapping from your source database to your target.

In previous sessions in this series we talked about the migration type or database type, whether it’s homogeneous or heterogeneous. Homogeneous is like for like, you’re migrating from a relational database source to the same version even, of a relational database, just in some other location – in a cloud environment or some other data center.

That’s fairly straightforward and simple. Often the database itself provides out-of-the box tools for that sort of a migration and replication. When you’re moving from a relational database to another relational database, but from a different vendor, that’s when you’re going to have a more of an impedance mismatch of some of the implementations of DDL, for instance.

You’ll find many of the same constructs because they’re both relational databases. But despite decades of so-called standards, there’s going to be variation… there are going to be some specific things for each vendor database. So for instance, if you’re migrating from Oracle to SingleStore, as far as data types, you’ll find a pretty close match from an Oracle varchar2 to a SingleStore varchar, from an nvarchar2 to a SingleStore varchar, from an Oracle FLOAT to a SingleStore decimal.

Those are just some examples, and we have a white paper that describes this in detail and gives you those mappings, such that you can use automated tools to do much of this schema migration as far as the data types and the table definitions, etc.
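To make the idea of an automated mapping concrete, here is a small Python sketch. The lookup table contains only the three mappings mentioned above; it is not the full mapping from the white paper, and lengths, precision, and scale are deliberately left out because they need their own rules. The column list is hypothetical.

```python
# Only the three type mappings named in the talk; anything else is flagged.
ORACLE_TO_SINGLESTORE = {
    "VARCHAR2": "VARCHAR",
    "NVARCHAR2": "VARCHAR",
    "FLOAT": "DECIMAL",
}


def map_type(oracle_type):
    """Return the target type name, or None when no mapping is recorded."""
    return ORACLE_TO_SINGLESTORE.get(oracle_type.upper())


def plan_columns(columns):
    """Split columns into those with a known mapping and those needing review."""
    mapped, needs_review = {}, []
    for name, oracle_type in columns:
        target = map_type(oracle_type)
        if target is None:
            needs_review.append(name)
        else:
            mapped[name] = target
    return mapped, needs_review


# Hypothetical source columns: (column name, Oracle type name).
columns = [
    ("customer_name", "VARCHAR2"),
    ("notes", "nvarchar2"),
    ("amount", "FLOAT"),
    ("created_at", "DATE"),
]
mapped, needs_review = plan_columns(columns)
print(mapped)
print("needs manual review:", needs_review)
```

Flagging unmapped types instead of guessing keeps the automated pass honest: anything in `needs_review` goes to a person with the white paper in hand.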

After the data types, the next thing that you would be looking at would be queries and the data manipulation language (DML). So, when you look at queries, you’ll be thinking, “Okay, what are the different sorts of query structures? What are the operators in the expression language of my source, and how do they map to the target?”

So, how can I rewrite the queries from the source to be valid in the target? Again, you’re going to look at the particular syntax around, for instance, outer joins, and whether you have recursive queries. Again, just using Oracle as an example, there’s a fairly clear correspondence between those capabilities in relational data stores like Oracle, PostgreSQL, MySQL, etc., and SingleStore.

If your source is a MySQL database, you’ll find that the client libraries can be used directly with SingleStore, because SingleStore is MySQL wire protocol compliant. So you can use basically any MySQL client driver, of the hundreds that are available across programming languages, with SingleStore. That simplifies some of your testing in that particular case.

The third thing I’d point out here is that, while you may be migrating from a relational database to another relational database, you should still consider this a heterogeneous move, because the source database is often, almost always these days, a legacy single-node type of database. That means it’s built on a disk-first architecture and it’s meant to scale vertically: to get more performance from a single machine, you scale up to bigger hardware with more CPUs.

And when you’re coming to SingleStore, you can run it as a single node, but the power of SingleStore is that it’s a scale-out distributed database, such that you can grow the database to the size of your growing dataset with simply adding nodes to the SingleStore cluster. SingleStore is distributed by default, or distributed native you might say, and that’s what also is one of the attributes that makes it a good cloud-native database with Singlestore Helios, and that allows us to elastically scale that cluster for Singlestore Helios, scale up and down, and I’ll come back to that in a moment.

But as part of that, when you think about the mapping, the structure of a source relational to a target like Singlestore Helios, you’re mapping a single-node database to a distributed one. So there’s a few extra things to consider, like the sharding of data, or some people call this partitioning or distributing the data, across the cluster.

The benefit of that is that you get resiliency, in the case of node failures you don’t lose data, but you also get to leverage the processing power of multiple machines in parallel. And this helps when you’re doing things like real-time raw data ingestion from Kafka pipelines and other sources like that.
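The sharding idea can be sketched in a few lines of Python. This is a generic hash-partitioning illustration, not SingleStore’s actual hash function or placement logic, which the talk does not describe; the partition count of 8 and the `account_id` shard key are assumptions for the example.

```python
import zlib
from collections import Counter


def partition_for(shard_key, partition_count):
    """Pick a partition by hashing the shard key (illustrative hash only)."""
    digest = zlib.crc32(str(shard_key).encode("utf-8"))
    return digest % partition_count


# Hypothetical rows keyed by account_id, spread over 8 partitions.
rows = [{"account_id": n, "amount": n * 1.5} for n in range(1000)]
placement = Counter(partition_for(row["account_id"], 8) for row in rows)

for partition in sorted(placement):
    print(f"partition {partition}: {placement[partition]} rows")
```

The same key always lands on the same partition, which is what lets lookups by shard key go to one place while scans fan out across all partitions in parallel. A key with few distinct values would skew the distribution, so the choice of shard key is part of the schema mapping work.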

This is described in more detail in our white paper, which I’ll point out in just a moment. So once you’ve got those things mapped, and you may be using an automated tool to do some of that schema mapping, you’ll have to think about the initial load.

And this, depending on your initial dataset, could take some amount of time, a significant amount of time, just when you consider the size of the source dataset, the network link across which data must move, what’s the bandwidth of that link?

And so if you’re planning a migration cut-over, like over a weekend time, you’ll want to estimate based on those things. And what’s the initial load going to be, and by when will that initial data load complete, such that you can plan the new transactions start of the replicating of the new data. And also when you’re doing the load, what other sorts of data prep needs to happen in terms of ensuring that the integrity constraints and other things like that are working correctly. I’ll touch a little bit about how we address that through parallelism.
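A back-of-the-envelope estimate along those lines might look like the Python sketch below. The dataset size, link speed, and the 70% efficiency factor are all hypothetical inputs; real throughput also depends on the source export rate, the target ingest rate, and how much of the load runs in parallel.

```python
def estimate_load_hours(dataset_gb, link_mbps, efficiency=0.7):
    """Estimate transfer time in hours for an initial load over a network link.

    dataset_gb: size of the source dataset in decimal gigabytes.
    link_mbps:  nominal link bandwidth in megabits per second.
    efficiency: assumed fraction of nominal bandwidth actually achieved.
    """
    if dataset_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("inputs must be positive and efficiency within (0, 1]")
    dataset_megabits = dataset_gb * 8 * 1000  # 1 GB = 8,000 megabits
    seconds = dataset_megabits / (link_mbps * efficiency)
    return seconds / 3600


# Hypothetical case: 2 TB over a 1 Gbps link at 70% efficiency.
hours = estimate_load_hours(dataset_gb=2000, link_mbps=1000)
fits_in_weekend = hours < 48
print(f"estimated initial load: {hours:.1f} hours, fits in a weekend: {fits_in_weekend}")
```

Running the same estimate with pessimistic and optimistic efficiency values gives a range to plan the cutover window against, rather than a single number.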

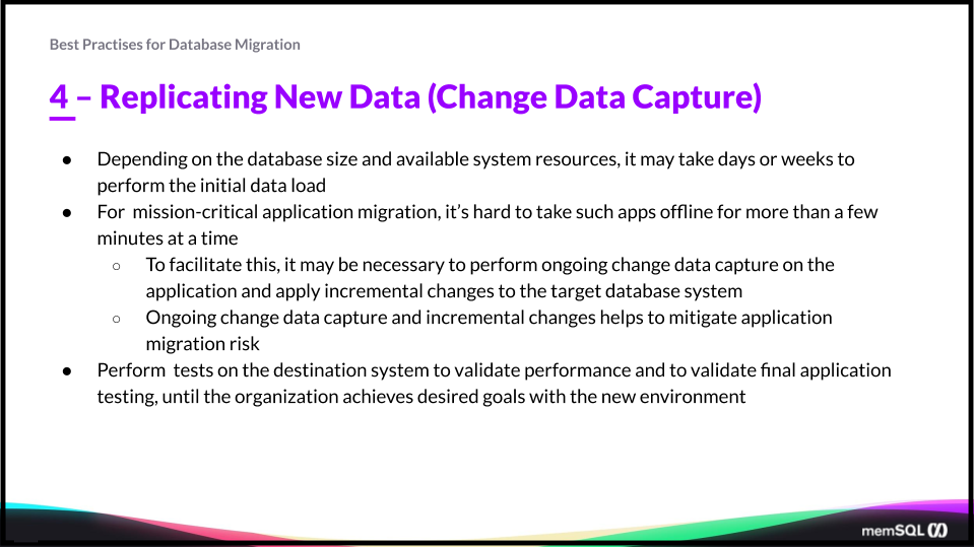

So finally, once that initial load is done, then you’re looking to see how you can keep up with new transactions that are written to the source database. So you’re replicating, you’ve got a snapshot for the initial load, and now you’re replicating from a point in time, doing what’s called change data capture (CDC).

As the data is written you want the minimal latency possible to move and replicate – copy – that data to the target system. And there are various tools on the market to do this. Generally you want this to have certain capabilities such as, you should expect some sort of failure. And so you need sort of some checkpoint in here so you don’t have to start from the very beginning.

Again, this could be tens, hundreds of terabytes in size if this is an operational database, or an analytic database, it’s going to have more data if it’s been used over time. Or, if it’s multiple databases, each may be a small amount of data, but together you have got a lot in process at the same time. So you want to have your replication such that it can be done in parallel and you have checkpointing to restart from the point of failure rather than the very beginning.
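The checkpointing idea can be shown with a toy Python sketch. This is not how SingleStore Replicate or any particular CDC tool is implemented; the change log is an in-memory list, the target is a dictionary, and the checkpoint is a small JSON file, all of which are stand-ins chosen so the example runs on its own.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the sequence number of the last applied change, or 0 if none."""
    if not os.path.exists(path):
        return 0
    with open(path) as handle:
        return json.load(handle)["last_applied"]


def save_checkpoint(path, sequence):
    """Write the checkpoint to a temp file, then swap it into place."""
    tmp = path + ".tmp"
    with open(tmp, "w") as handle:
        json.dump({"last_applied": sequence}, handle)
    os.replace(tmp, path)


def replicate(change_log, target, checkpoint_path, fail_at=None):
    """Apply changes newer than the checkpoint; fail_at simulates an outage."""
    last_applied = load_checkpoint(checkpoint_path)
    for sequence, key, value in change_log:
        if sequence <= last_applied:
            continue  # already applied before the restart
        if sequence == fail_at:
            raise ConnectionError("simulated failure")
        target[key] = value  # an upsert, so re-applying a change is harmless
        save_checkpoint(checkpoint_path, sequence)


# Hypothetical change stream: (sequence number, key, new value).
change_log = [(1, "bill:1", 10.0), (2, "bill:2", 25.0), (3, "bill:1", 12.0), (4, "pay:1", 12.0)]
target = {}
checkpoint_path = os.path.join(tempfile.mkdtemp(), "cdc_checkpoint.json")

try:
    replicate(change_log, target, checkpoint_path, fail_at=3)
except ConnectionError:
    pass
resumed_from = load_checkpoint(checkpoint_path)
replicate(change_log, target, checkpoint_path)  # picks up at change 3
print("resumed after sequence", resumed_from, "final target:", target)
```

Applying the change before saving the checkpoint means a crash between the two steps replays one change on restart, which is safe here only because the apply step is an idempotent upsert.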

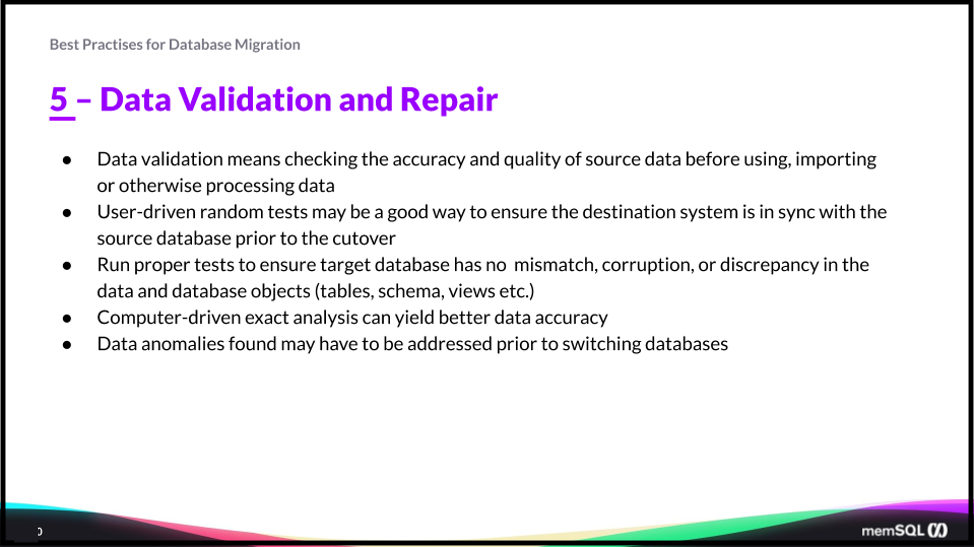

And then finally, data validation and repair. With these different objects in a source and a target, there’s room for error, so you’ve got to have a way to validate the data and run tests against it, and you want to think about automating that. As much as possible, when testing your initial load, you want to validate the data before starting to replicate. Then, as data is replicating, you’re going to have a series of ongoing validations to ensure that nothing is mismatched and your logic is not incorrect.
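One simple form of automated validation is to compare a row count and a content checksum per table between source and target. The Python sketch below uses two in-memory `sqlite3` databases as stand-ins for the source and target systems; the table names, the `make_db` helper, and the deliberately missing row are made up for the example.

```python
import hashlib
import sqlite3


def table_fingerprint(connection, table, key_column):
    """Return (row count, checksum) for a table, reading rows in key order."""
    # Table and column names come from a trusted migration manifest here,
    # not from user input, so string formatting is acceptable in this sketch.
    rows = connection.execute(
        f"SELECT * FROM {table} ORDER BY {key_column}"
    ).fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def validate(source, target, tables):
    """Return the names of tables whose fingerprints differ."""
    mismatches = []
    for table, key_column in tables:
        if table_fingerprint(source, table, key_column) != table_fingerprint(
            target, table, key_column
        ):
            mismatches.append(table)
    return mismatches


def make_db(bills, payments):
    """Build a stand-in database with two small tables."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE bills (id INTEGER PRIMARY KEY, amount REAL)")
    db.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount REAL)")
    db.executemany("INSERT INTO bills VALUES (?, ?)", bills)
    db.executemany("INSERT INTO payments VALUES (?, ?)", payments)
    return db


source = make_db([(1, 10.0), (2, 25.0)], [(1, 10.0), (2, 25.0)])
target = make_db([(1, 10.0), (2, 25.0)], [(1, 10.0)])  # one payment missing
mismatches = validate(source, target, [("bills", "id"), ("payments", "id")])
print("tables needing repair:", mismatches)
```

Across two different database engines, values would need to be normalized before hashing (number formatting, trailing spaces, time zones), since two drivers can return the same logical value in different forms.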

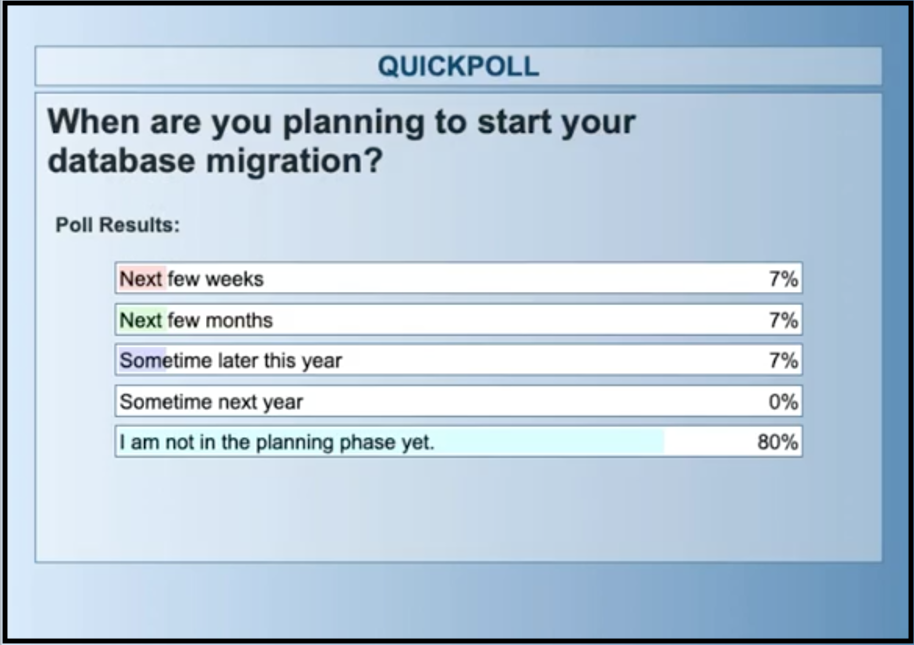

Let’s go to our first polling question. You’re attending this webinar probably because you’ve got cloud database migration on your mind. Tell us when you are planning a database migration. Coming up in the next few weeks, in which case you would have done a lot of this planning and testing already. Or maybe in the next few months, and you’re trying to find the right target database. Or maybe it’s later in this year or next year, and maybe you’re still in the migration strategy, business planning effort.

Okay. Most of you are not in the planning phase yet, so you’re looking to maybe see what’s possible. You might be looking to see what target databases are available, and what you might be able to do there. We hope you take a look at what you might do with Singlestore Helios in the Cloud.

We’ll talk about moving workloads to Singlestore Helios. Singlestore Helios is SingleStore’s database as a service. Singlestore Helios is, at its essence, the same code base as SingleStore self-managed, as we call it, but it’s provided as a service such that you don’t have to do any of your own work on the infrastructure management. It takes all the non-differentiated heavy lifting away, such that you can focus just on the definition of your data.

Like SingleStore self-managed, Singlestore Helios provides a way to do multiple workloads of analytics with transaction simultaneously on the same database or multiple databases in a Singlestore Helios cluster. You can run Singlestore Helios in all the major cloud providers, AWS, GCP, Azure is coming soon, in multiple regions.

Looking at some of the key considerations in moving to Singlestore Helios … I mentioned before this identification of the database type source and target. There’s multiple permutations of what to consider like for like with homogenous. Although it may be a relational to relational database, as the examples I just provided with, say, Oracle and SingleStore, there are still some details to be aware of.

The white paper we provide gives a lot of that guidance on the mapping. There are things that you can take advantage of in Singlestore Helios that are just not available or not as accessible in Oracle. Again, that’s things like the combination of large, transactional workloads simultaneously with the analytical workloads.

Next thing is the application architecture. I mentioned this earlier. Are you moving? Is your application architecture going to stay the same? Most likely it’s going to change in some way, because when these migrations are done for a business, typically they’re making selections in the application portfolio for new replacement apps, SaaS applications often, to replace on-prem applications.

A product life cycle management, a PLM system on prem, often is not carried on into the cloud environment. Some SaaS cloud provider is used, but you still have the integrations that need to be done. There could be analytical databases that need to pull from that PLM system, but now they’re going to be in the cloud environment.

Looking at, what are the selections and the application portfolio or the application rationalization, as many people may think about it? As to what that means for the database. Then for any particular app, if it’s going to be refactored from a monolith to microservices-based, what does that mean for the database?

Our view in terms of SingleStore for use in microservices architectures is that you can have a level of independence of the service to the database, yet keep the infrastructure as simple as possible. We live in an era where it’s really convenient to spin up lots of different databases really easily, but even when they’re in the cloud, those are more pieces of infrastructure that you now have to manage the life cycle of.

As much as possible you should try to minimize the amount of cloud infrastructure that you have to manage. Not just in the number of instances of database, but also the variety of types. Our view of purpose-built databases and microservices is that you can have the best of purpose-built, which is support for different data structures and data access methods, such as having a document store, and geospatial data, full-text search with relational, with transactions, analytics, all living together without having to have the complexity of your application to communicate with different types of databases, different instances, to get that work done.

Previously in the industry, and part of the reason why purpose-built databases caught on, is that they provided a flexibility to start simply, such as document database, and then grow and expand quickly. Now we, as an industry, have gone to the extreme of that where there’s an explosion of different types of sources. To get a handle on that complexity, we’ve got to simplify and bring that back in.

SingleStore provides all of those functions I just described in a single service, and Singlestore Helios does that as well. You can still choose to segment by different instances of databases in the Singlestore Helios cluster, yet you have the same database type, and you can handle these different types of workloads. For a microservices-based architecture, it’s giving you the best of both worlds: the best of the purpose-built polyglot persistence NoSQL sorts of capabilities and scale-out, but with the benefits of robust ANSI SQL and relational joins.

Finally, the third point here is optimizing the migration. As I said, with huge datasets, the business needs continuity during that cutover time. You’ve got to maintain service availability during the cutover. The data needs to be consistent, and the time itself needs to be minimized on that cutover.

Let me give a run through some of the advantages of moving to Singlestore Helios. As I said, it’s a fully managed cloud database as a service, and, as you would expect, you can elastically scale up a SingleStore cluster and scale it down.

Scaling down is also maybe even perhaps the more important thing, because if you have a cyclical or seasonal type of business like retail, then there’ll be a peak towards the end of the year, typically Thanksgiving, Christmas, holiday seasons. That infrastructure, you’ll want to be able to match to the demand without having to have full peak load provisioned for the whole year. Of course cloud computing, this is one of the major benefits of it. But, your database has to be able to take advantage of that.
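The arithmetic behind that point is easy to sketch in Python. The monthly unit counts below are hypothetical, picked only to show the shape of a seasonal retail workload, and the comparison is in unit-months rather than currency because no prices are given here.

```python
# Hypothetical demand: 4 units for ten months, 12 units for the two peak months.
monthly_units = [4] * 10 + [12] * 2

elastic_unit_months = sum(monthly_units)                     # scale with demand
peak_unit_months = max(monthly_units) * len(monthly_units)   # provision for peak all year
savings = 1 - elastic_unit_months / peak_unit_months

print(f"elastic: {elastic_unit_months} unit-months")
print(f"peak-provisioned: {peak_unit_months} unit-months")
print(f"reduction from scaling down off-peak: {savings:.0%}")
```

With these made-up numbers, matching capacity to demand uses less than half the unit-months of provisioning for peak all year; the real figure depends entirely on how sharp your seasonal peak is.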

Singlestore Helios does that through, again, its distributed nature. If you’re interested in how this works exactly, go to the SingleStore YouTube channel. You’ll see quick tutorials on how to spin up a Singlestore Helios cluster and resize it. The example there shows growing the cluster. Then once that’s done, it rebalances the data, but you can also size that cluster back down.

As I mentioned, it eliminates a lot of infrastructure and operations management. It gives you some predictability in costs. With Singlestore Helios, without going into the full pricing of Singlestore Helios, basically our pricing is structured around units or nodes. Those nodes, or resources, are described by computing resources in terms of how many CPUs, how much RAM. Eight virtual CPUs, 64 gigabytes of RAM. It’s based on your data growth and your data usage patterns. That’s the only thing you need to be concerned about in terms of cost. That makes doing financial forecasts for applications a lot simpler.

Again, since Singlestore Helios is provided in multiple cloud providers like AWS, GCP, and soon Azure, in multiple regions, you can co-locate your chosen Singlestore Helios cluster with, or keep it in network proximity to, your target application environment, such that you can minimize any costs in terms of data ingress and egress.

When you bring data into Singlestore Helios, you just get the one cost, so the Singlestore Helios unit cost. From your own application, your cloud-hosted application or your datacenter-hosted application that’s bringing data into Amazon, or Azure, or GCP, you may incur some costs from those providers, but from us, it’s very simple. It’s just the per-unit, based on the node.

Singlestore Helios is reliable out of the box in that it’s a high availability (HA) deployment, such that if any one node fails, you’re not losing data. Data gets replicated to another leaf node. Leaf nodes in Singlestore Helios are data nodes that store data. On every leaf node, there’s one-to-many partitions, so you’re guaranteed to have a copy of that data on another machine. Most of this would be fairly under the covers for you. You should not be experiencing any sort of slowdown in your queries, provided that your data is distributed.

Next, freedom without sprawl. What I’m talking about is, Singlestore Helios allows you to, as I said earlier, combine multiple types of workloads, to do mixed workloads of transactions and analytics, and different types of data structures like a document. If you’re creating a product catalog and you’re querying that, or you have orders structured as documents, with Singlestore Helios as well as SingleStore, you can store these documents, such as the order, in the raw JSON format, directly in SingleStore. We have an index into that such that you can query and make JSON queries part of your normal application logic.

In that way, SingleStore can act as a document or key-value store in the same way that MongoDB or AWS DocumentDB or other types of document databases do. But, we’re more than that, in that you’re not just limited to that one kind of use case. You can add relational queries. A typical use case here is storing the raw JSON but then selecting particular parts of the nested array to put into relational or table columns, because those can be queried as a columnstore in SingleStore. That has the advantage of compression.
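The pattern of keeping the raw document while pulling parts of a nested array out into columns can be illustrated with plain Python. This sketch does not use SingleStore’s JSON functions; it only shows the shape of the transformation, and the order document and field names are invented for the example.

```python
import json


def order_to_rows(raw_json):
    """Flatten the nested items array of an order into relational-style rows."""
    order = json.loads(raw_json)
    return [
        (order["order_id"], item["sku"], item["quantity"])
        for item in order["items"]
    ]


# Hypothetical order document, stored as-is alongside the extracted rows.
raw_order = (
    '{"order_id": 7, "customer": "acme", '
    '"items": [{"sku": "A1", "quantity": 2}, {"sku": "B4", "quantity": 1}]}'
)
rows = order_to_rows(raw_order)

for order_id, sku, quantity in rows:
    print(order_id, sku, quantity)
```

The raw JSON stays available for document-style queries, while the extracted `(order_id, sku, quantity)` rows are the part that would live in table columns for relational and analytic queries.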

There’s a lot of advantages in doing these together, relational with document, for instance, or relational with full-text search. Again, you can have this freedom of the different workloads, but without the sprawl of having to set up a document database and then separately a relational database to handle the same use case.

Then, finally, I would say a major advantage of Singlestore Helios is that it provides a career path for existing DBAs of legacy single-node databases. There’s a lot of similarity in the basic fundamental aspects of a database management system, but what’s different is that, with SingleStore, you get a lot of what was previously confined to the NoSQL types of data stores, key-value stores, and document stores, for instance. But, you’d get those capabilities in a distributed nature right in SingleStore. It’s, in some ways, the ideal path from a traditional single-node relational database career and experience into a cloud-native operational and distributed database like SingleStore.

So what I would like to do at this point is show you a simple demonstration of how this works. I’d refer you to our whitepaper for more details about what I discussed earlier from migrating Oracle to Singlestore Helios, and you’ll find it there by navigating from our homepage to resources, Whitepapers and Oracle and SingleStore migration. So with that, let me switch screens for just a moment.

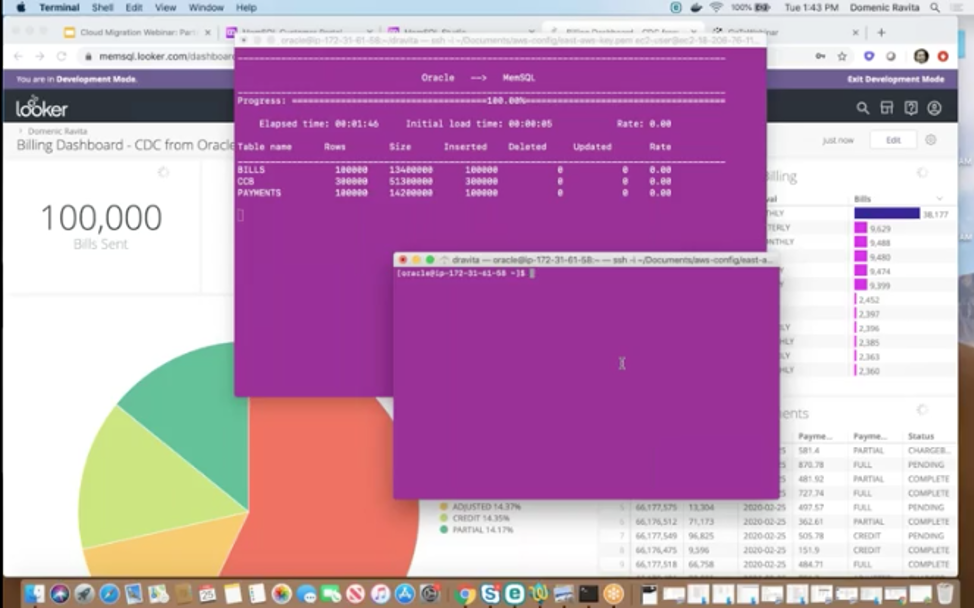

And here’s what I’m going to do. I’ve got a Telnet session here, or SSH session, into a machine running on Amazon where I’ve got an Oracle database, and I’m going to run those two steps I just described: basically the initial data load, and then I’m going to start the replication process. Once that replication process, with SingleStore Replicate, is running, then I’ll start inserting new data into the source Oracle database, and you’re going to see that written to my target. And I’ll show a dashboard to make it easy to visualize the data that’s being written.

So the data here is billing data for a utility billing system. I’ve got new bills and payments and clearance notifications that come through that source database. I’ll show you the schema in just a moment. So what I’ll do is I’ll start my initial snapshot. I’ve got one more procedure to run here.

Okay. So that’s complete and now I’ll start my replication process. And so from my source system, Oracle, we’re writing to Singlestore Helios. And you see it’s written 100,000 rows to the BILLS table, 300,000 to CCB and 100,000 to PAYMENTS.

So now let’s take a look at our view there, and we can take a look at the database. It’s writing to a database called UTILITY. And if I refresh this, I’ll see that I will have some data here in those three tables… it gave me the count there, but I can quickly count the rows, see what I got there.

So I also have a dashboard, which I’ll show here, and that confirms that we’re going against the same database that I just showed you the query for. So at this point I’ve got my snapshot of the initial data for the bills, payments, and clearance notices.

So what I’ll do now is start another process that’s going to write data into this source Oracle database. And we’ll see how quickly this happens. Again, I’m running from a machine image in Amazon US East. I’ve got my Singlestore Helios cluster also on Amazon US East. And so let’s run this to insert into these three tables here.

And as that runs, you’ll see SingleStore Replicate, which you’re seeing here, it’s giving us how many bytes per second are being written, how many rows are being inserted into each of these tables, and what’s our total run time in terms of the elapsed and the initial load time for that first snapshot data. So here you’ll see my dashboard’s refreshing. You start to see this data being written here into Singlestore Helios.

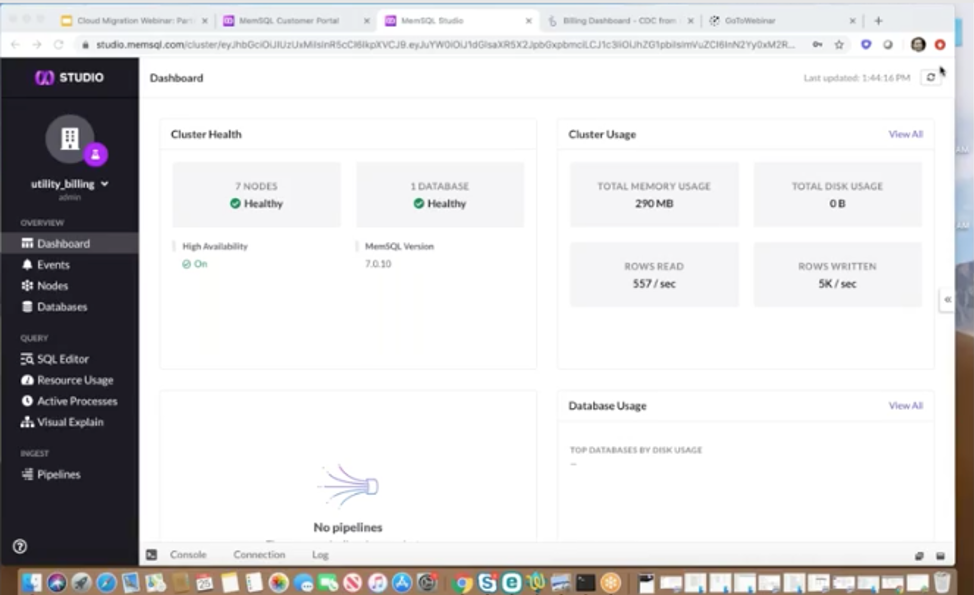

What we can do is use SingleStore Studio to view the data as it’s being written. So let’s first take a look at the dashboard and you can see we’re writing roughly anywhere from four to 10,000 rows per second against the database, which is a fairly small rate. We can get rates much higher than that in terms of tens of thousands or hundreds of thousands of rows written per second depending on the size. If they’re small sometimes it can be millions of rows in certain situations.

And let’s take a look at the schema here. You’ll see that this data is increasing in size as I write it, and SingleStore gives you this Studio view such that you can see diagnostics on the table as it’s happening, as data’s being written. Now you may notice that these three tables are columnstore tables. Columnstores are used for analytic queries; they have really superior performance for analytic queries and they compress the data quite a lot. And our columnstores use a combination of memory and disk.

After some period of time, this data in memory will persist, will write to disk, but even when it’s in memory, you’re guaranteed durability and resilience, because Singlestore Helios provides high availability by default. You can see that redundancy through the partitions of the database in this view.

Case Studies

I’ll close out with a few example case studies. First… Well I think we’re running a little bit short on time, so I’m going to go directly to a few of these case studies here.

Singlestore Helios was first launched back in fall of last year and since then we’ve had several migrations. It’s been one of the fastest launches in terms of uptake of new customers that we’ve seen in the company’s history.

This is a migration from an existing SingleStore self-managed environment for a company called SSIMWAVE who provides video compression and acceleration for all sorts of online businesses, and their use case is around interactive analytics, ad hoc queries. And they want to be able to look at what are the analytics around how to optimally scale their video serving and their video compression.

And so they are a real-time business and they need operational analytics on this data. Just to draw an analogy, if you’re watching Netflix and you have a jitter or a pause, or you’re on Prime Video and you have a pause, for any of these online services it’s an immediately customer-impacting, customer-facing scenario. And so this is a great example of a business that depends on Singlestore Helios and the Cloud to provide this reliability to deliver analytics for a customer-facing application. Sort of what we call analytics live in SLA. They’ve been on Singlestore Helios now for several months, and you see some of the quote here on why they’ve moved and the advantage of the time savings with Singlestore Helios.

A second example is Medaxion, and they were moving from… Initially they moved to SingleStore from MySQL instance, and then over to Singlestore Helios. And their business is providing information to anesthesiologists, and for them, again, it’s a customer facing scenario for operational analytics.

They’ve got to provide instantaneous analysis through Looker dashboards and ad hoc queries against this data. And Singlestore Helios is able to perform in this environment for an online SaaS application, essentially, where every second counts in terms of looking at what’s the status of the records that Medaxion handles.

And then finally, I’ll close with this Thorn. They are a nonprofit that focuses on helping law enforcement agencies around the world identify trafficked children faster.

And if there’s any example that shows that time criticality, and the importance of in-the-moment operational analytics, I think this is it, because most of this data that law enforcement needs exists in various silos or various systems or different agencies’ systems, and what Thorn does is to unify and bring all of this together, but do it in a convenient, searchable way.

So they’re taking the raw sources among which are posts online, which they feed through machine learning process to then land that processed data into Singlestore Helios such that their Spotlight application can allow instant in the moment searches by law enforcement to identify based on image recognition and matching if a child has been involved… is in a dangerous situation and correlating these different law enforcement records.

So those are three great examples of Singlestore Helios in real-time operational analytics scenarios that we thought we’d share with you. And with that I’ll close, and we’ll move to questions.

Q&A and Conclusion

How do I learn more about migration with SingleStore?

On our resources page you’ll find a couple of whitepapers on migration: one about Oracle specifically and one more generally about migrating. Just navigate to the home page, go to Resources, Whitepapers. You’ll find that. Also there is a webinar we did back last year or before, the five reasons to switch – you can catch that recording. Of course you can also contact us directly and we’ll provide an email address here to share with you.

Where do I find more about SingleStore Replicate?

So that’s part of 7.0, so you’ll find all of our product documentation is online and SingleStore Replicate is part of the core product, so if you go to docs.singlestore.com, then you’ll find it there under the references.

Is there a charge for moving my data into Singlestore Helios?

There’s no ingress charge that you incur using SingleStoreDB Self-Managed or Singlestore Helios. Our pricing for Singlestore Helios is purely based on the unit cost as we call it. And the unit again is the computing resources for a node and it’s just the leaf node, it’s just the data node. So eight vCPUs, 64GB of RAM, that is a Singlestore Helios leaf node. All of that is just a unit. That’s the only charge.

But you may incur data charges depending on where your source is, your application or other system, for the data leaving, the egress from that environment, if it’s a cloud environment. So not from us per se, but you may from your cloud provider.