SingleStore Universal Storage is a single kind of table that can support analytical and transactional workloads. For decades, many believed that you needed special databases and technology for analytical vs. transactional workloads. SingleStore has proved that wrong. With our 7.5 release, we're delivering the fourth installment of Universal Storage Technology, with support for multi-column keys, and making columnstore the default table type for new installations. We're proud to say all the major features of Universal Storage are done.

This is the last in a series of four articles that describe SingleStore's unique, patented Universal Storage feature. Read Part 1, Part 2 and Part 3 in the series to learn the whole story.

The main benefits of Universal Storage are:

(1) Improved Total Cost of Ownership (TCO), because data doesn't need to all fit in RAM for typical operations that involve upserts or need unique key enforcement. That reduces cost by not requiring servers with very large RAM, which can be expensive.

(2) Reduced complexity, because now you don't have to do some operations on a rowstore and then move data to a columnstore, delivering speed and performance not possible before.

(3) Improved analytics performance on large tables when combined with upserts, unique key enforcement, fast lookups, and other OLTP-style operations. That's because analytical queries can process hundreds of millions of rows per second on columnstore tables on just a single core, whereas peak performance per core on rowstores is about 20 million rows per second.

What's New in 7.5 for Universal Storage

In the 7.0, 7.1, and 7.3 releases, we evolved our columnstore table type to do things only OLTP-style storage structures were supposed to be able to do. That includes:

- subsegment access (fast seeking into columnstores* to retrieve one or a few records when their position is known)

- single-column hash indexes

- single-column unique indexes, constraints, and primary keys

- upsert support

- option to set columnstore as the default table type

*This new columnstore table type is what we call Universal Storage. But you'll still see it called "columnstore" in our syntax.

In 7.5 we now support:

- multi-column hash indexes

- multi-column uniqueness

- upserts to tables with multi-column unique keys

- columnstore as the default table type for new clusters by default

From a functional perspective, Universal Storage is done now. We don't expect to add more surface area to it. The performance is great, but we can make it even better, so stay tuned for advances on that front in future releases.

Examples

The examples covered in this article are:

- loading 28 million rows of data into the lineitem table for TPC-H

- moving that data into a table with a unique key on it that has two columns

- showing that this unique key is enforced

- seeking on this unique key and show how fast that is

The lineitem table is realistic, similar to one you might find to support ecommerce applications doing transactions, analytics, or both. Uniqueness enforcement is a common requirement for all kinds of applications. Having the database automatically enforce uniqueness is of course beneficial since it automatically ensures data integrity and frees the application developer from enforcing uniqueness themselves with application code.

Loading into the lineitem table

SingleStore Studio and the S2MS management console both allow you to easily load the TPC-H data set at the scale factor 100. Here, I just load part of the data, and stop loading it after I get to about 28 million rows of lineitem data, to make it a bit quicker to get started. I actually just copied part of the example that loads the TPC-H data from Studio.

To repeat this test yourself, run the following commands:

DROP DATABASE IF EXISTS tpch;

CREATE DATABASE tpch;

USE tpch;

CREATE TABLE `lineitem` (

`l_orderkey` bigint(11) NOT NULL,

`l_partkey` int(11) NOT NULL,

`l_suppkey` int(11) NOT NULL,

`l_linenumber` int(11) NOT NULL,

`l_quantity` decimal(15,2) NOT NULL,

`l_extendedprice` decimal(15,2) NOT NULL,

`l_discount` decimal(15,2) NOT NULL,

`l_tax` decimal(15,2) NOT NULL,

`l_returnflag` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_linestatus` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipdate` date NOT NULL,

`l_commitdate` date NOT NULL,

`l_receiptdate` date NOT NULL,

`l_shipinstruct` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipmode` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_comment` varchar(44) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

SHARD KEY (`l_orderkey`) USING CLUSTERED COLUMNSTORE

);

CREATE OR REPLACE PIPELINE tpch_100_lineitem

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/lineitem/'

config '{"region":"us-east-1"} '

SKIP DUPLICATE KEY ERRORS

INTO TABLE lineitem

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

START ALL PIPELINES;

Now, you can run this query on another session to see the progress of the pipeline:

SELECT

CONCAT(PIPELINE_NAME) AS pipelineId,

sub.BATCH_STATE AS lastBatchState,

IFNULL(sub.BATCH_ROWS_WRITTEN, 0) AS lastBatchRowsWritten

FROM (

SELECT

DATABASE_NAME,

PIPELINE_NAME,

BATCH_STATE,

BATCH_ROWS_WRITTEN,

ROW_NUMBER() OVER (

PARTITION BY DATABASE_NAME,

PIPELINE_NAME

) AS r

FROM

INFORMATION_SCHEMA.PIPELINES_BATCHES_METADATA

WHERE

BATCH_STATE NOT IN ('No Data', 'In Progress')

) sub

WHERE

r = 1 AND DATABASE_NAME='tpch'

ORDER BY pipelineId ASC;

When enough data has loaded (say about 28 million rows) you can stop the pipeline so it won't take as long and use as much space (there are about 600 million rows total available):

stop all pipelines;

Now check the size of the table:

select format(count(*), 0) from lineitem;

Create lineitem_uk table with a two-column unique key

Now, we'll create a different version of the table with a primary key:

set global default_table_type = 'columnstore';

create table `lineitem_uk` (

`l_orderkey` bigint(11) NOT NULL,

`l_partkey` int(11) NOT NULL,

`l_suppkey` int(11) NOT NULL,

`l_linenumber` int(11) NOT NULL,

`l_quantity` decimal(15,2) NOT NULL,

`l_extendedprice` decimal(15,2) NOT NULL,

`l_discount` decimal(15,2) NOT NULL,

`l_tax` decimal(15,2) NOT NULL,

`l_returnflag` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_linestatus` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipdate` date NOT NULL,

`l_commitdate` date NOT NULL,

`l_receiptdate` date NOT NULL,

`l_shipinstruct` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipmode` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_comment` varchar(44) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

shard key (l_orderkey),

sort key(l_shipdate),

primary key(l_orderkey, l_linenumber)

);

Now, run this to move the data over to lineitem_uk:

insert into lineitem_uk

select * from lineitem;

Verifying that Uniqueness is Enforced

First, let's verify that uniqueness is really being enforced. If you run this:

insert into lineitem_uk

select * from lineitem limit 1;

You'll get this error:ERROR 1062 ER_DUP_ENTRY: Leaf Error (172.17.0.2:3308): Duplicate entry '19805284-1' for key 'PRIMARY'

That's what we'd expect, since we know we already put that row into lineitem_uk once before.

Profiling Performance of a Multi-column Unique Key Lookup

Now, let's profile a seek query that looks up one row via a key. (If you stopped the loading early, you may need to verify that a row with l_orderkey = 364000000 is present; if it's not there, pick another l_orderkey value that is there, and modify the query below to use that value instead.) Every order had a l_linenumber 1 value, so that's always a safe choice. Now, run the single-row lookup:

select * from lineitem_uk

where l_orderkey = 364000000 and l_linenumber = 1;

For me, the profile took about 2 milliseconds to complete the ColumnStoreScan operator, which actually does a seek using the primary key index on (l_orderkey, l_linenumber). I'm using a new MacBook with 8 cores. You can profile in Studio by clicking on the "..." on the upper right of the SQL pane and selecting the profile option. If you mouse over the ColumnStoreScan operator, it will show how long it took. You'll have to run it twice to get accurate results

Seek time of 2 milliseconds into a columnstore table that's moderately wide, like this one, is quite fast, as expected (I showed that in one of my earlier Universal Storage blogs). This is OLTP-level speed on a columnstore.

Upsert on a Multi-column Key Columnstore

One of the most common issues customers have had in the past when ingesting data into SingleStore is that they have a streaming upsert workload, and they had to first load the data into a rowstore to accomplish that. They'd then migrate it to a columnstore after it stabilized, say after a few days, for long-term storage, to save money on RAM since rowstores must be in RAM. Queries would have to be modified to retrieve data from the columnstore and the rowstore and combine the information. This was extra work for developers.

Many of the upsert operations in these kinds of workloads depend on a multi-column unique key. Until SingleStore 7.5, you could not upsert to Universal Storage (columnstore) directly if you had a multi-column unique key. We're happy to say that now we can handle multi-column upsert workloads directly into a columnstore table. That means you no longer need to migrate data from a rowstore to a columnstore or perform a potentially non-trivial combination of data from two tables, a "hot" rowstore and a "long term archival" columnstore.

To help understand the examples that follow, a good reference on the upsert-style operations supported in SingleStore is here.

The most basic kind of conditional insert is not really an upsert at all, but rather INSERT IGNORE. As an example of this, suppose we have a staging table with two rows in it, created like so:

create table upsert_records as select * from lineitem_uk limit 2;

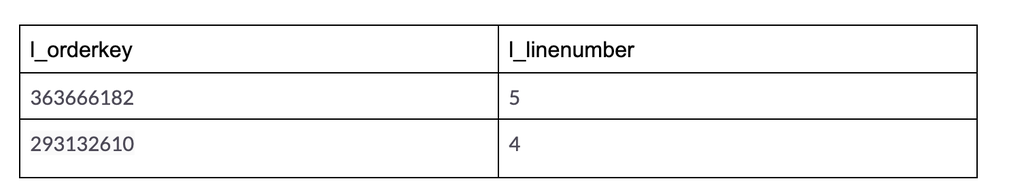

When I ran this, I got records with these keys:

I happen to know this l_orderkey is not present in my lineitem_uk since it is 1 greater than the maximum: 419382341

So let's change one of the upsert_records to have a new unique key:

update upsert_records set l_orderkey = 419382341

where l_orderkey = 293132610 and l_linenumber = 4;

Now, if we run this INSERT IGNORE, we see we get one new record, not two, since only one has a new key:

insert ignore into lineitem_uk

select * from upsert_records;

Now, for an actual upsert, let's change the l_quantity for one of the upsert_records:

update upsert_records set l_quantity = 500.00

where l_orderkey = 363666182 and l_linenumber = 5;

This INSERT ON DUPLICATE KEY UPDATE statement, if it sees a duplicate key, will update the l_quantity:

insert into lineitem_uk (

`l_orderkey`,

`l_partkey`,

`l_suppkey`,

`l_linenumber`,

`l_quantity`,

`l_extendedprice`,

`l_discount`,

`l_tax`,

`l_returnflag`,

`l_linestatus`,

`l_shipdate`,

`l_commitdate`,

`l_receiptdate`,

`l_shipinstruct`,

`l_shipmode`,

`l_comment`

)

select * from upsert_records

on duplicate key update

l_quantity = values(l_quantity);

This will then show that l_quantity for one of the lineitem_uk records got updated:

select s.*

from lineitem_uk s, upsert_records r

where s.l_orderkey = r.l_orderkey

and s.l_linenumber = r.l_linenumber;

All the other upsert-style commands and operations will also now work with columnstore targets, when the columnstore has the necessary multi-column unique key. These include REPLACE, and the REPLACE, IGNORE, SKIP DUPLICATE KEY, and ON DUPLICATE KEY UPDATE options of pipelines and LOAD DATA, as applicable.

The Future of Universal Storage

All major features of Universal Storage are done. The large majority of applications can use it as is in 7.5, benefiting from fast seeks, uniqueness enforcement, fast upserts, and fast analytics. We expect to make it even better in the future. For example, we may introduce an in-memory columnstore buffer cache, which could let us speed up seek times even more. Right now, we rely on the buffer cache of the file system to cache recently-accessed columnstore data in RAM. That works surprisingly well, but having our own buffer cache could reduce CPU cost of copying data during query execution.

In addition, we may add SELECT FOR UPDATE support for columnstores in a future release. Today, it works for rowstores but not columnstores. SELECT FOR UPDATE is useful if you want serializable isolation for selected OLTP-style updates.

Summary

Universal Storage Episode 4 concludes the saga of Universal Storage by adding multi-column key and full UPSERT support, and making columnstore the default table type. This is a major step forward in reducing TCO and simplifying application development by letting you do most everything with one table type -- columnstore.

With Universal Storage, you can reserve the use of rowstores for your highest performance OLTP-style operations. You can do almost everything you need with columnstores, including SQL statements that do small inserts, deletes, and updates, fast seeks, and both small and large aggregations.

Try SingleStore for free, using a Singlestore Helios trial or SingleStoreDB Self-Managed free edition.

Read Part 1, Part 2 and Part 3 in the series to learn the whole story behind SingleStore's patented Universal Storage feature.